AI is no longer a novelty in hiring. Candidates are openly admitting to using ChatGPT to draft resumes, prep interview answers, and even simulate mock interviews. On the other side, recruiters are testing AI recruiting tools to screen resumes, generate job descriptions, and analyze interview transcripts.

The result? A strange arms race where AI-written applications meet AI-enabled hiring processes. And somewhere in the middle, recruiters still need to identify the genuine humans who can actually do the job.

One of the most effective defenses against AI-generated fakery lies in a simple tool we’ve had all along: situational interview questions. And it’s not only about what candidates say in interviews. Structured assessments that measure personality tendencies, situational judgment, and work environment preferences are similarly hard for AI to fake, because they require authentic self-reflection rather than polished scripts.

Recruiters have already sensed this in practice. AI-generated interview answers may sound polished when questions are predictable, but situational and judgment-based prompts tend to expose their weaknesses. Responses often come across as generic, formulaic, and lacking depth.

So why are situational questions so much harder for AI to fake? And how can recruiters design them to separate authentic candidates from AI-polished imposters?

Let’s break it down.

Predictable questions vs unpredictable scenarios

Most interview prep guides, even before ChatGPT, trained candidates to expect certain questions:

- What are your greatest strengths?

- Tell me about a time you worked in a team.

- Where do you see yourself in five years?

These are predictable prompts. They map neatly to training data and standard coaching frameworks. ChatGPT excels here. It has absorbed countless examples and can spin up a confident, structured answer in seconds.

Situational questions work differently. A recruiter might ask:

“Imagine you’re covering the front desk at 5 p.m. when three customers arrive at once. One is angry about a billing error, another is in a rush to pick up an order, and the third just wants directions. How do you handle it?”

That level of specificity forces a candidate to juggle priorities, show judgment, and apply practical reasoning.

When ChatGPT tackles this, it tends to fall back on stock phrases: “I would remain calm, communicate clearly, and ensure each customer feels heard.”

It sounds neat, but it lacks the messy human detail that makes an answer believable. Real candidates reference lived context: “At my last retail job, this exact thing happened during the holiday rush. I asked the rushed customer to give me two minutes, calmed the angry customer by apologizing, and waved over a colleague to give directions.”

The first answer is textbook. The second is grounded.

The need for context-rich detail

Strong situational answers aren’t abstract. They’re built on context. Candidates need to consider:

- Who the stakeholders are

- What the constraints are (time, budget, authority)

- What the real-world stakes feel like

For example, in a finance internship interview, a strong human answer might reference clients upset about two consecutive quarters of underperformance and describe how the intern managed expectations while escalating to a senior advisor.

ChatGPT, by contrast, tends to float at the surface: “I would reassure the client, explain the situation clearly, and collaborate with my team to resolve the issue.”

Polite. Well-structured. But ultimately hollow.

Follow-up questions expose weak answers

Recruiters rarely stop at a single prompt. They probe.

- “What exactly did you say in that moment?”

- “Why did you choose that option instead of another?”

- “What was the outcome?”

Here’s where AI-scripted answers tend to collapse. Because a script generated by ChatGPT isn’t drawn from lived experience, the candidate relying on it struggles to sustain follow-up detail. Their responses start to contradict earlier claims or recycle the same surface-level reasoning.

Candidates drawing on real experience, by contrast, can ground their responses in personal memory. They can give second and third layers of detail that stay coherent and feel authentic.

Situational questions reveal reasoning, not polish

Another reason situational questions are harder for AI: they don’t reward memorization. They test real-time problem solving.

Recruiters can see how a candidate:

- Organizes thoughts under pressure

- Balances competing priorities

- Weighs trade-offs between short-term fixes and long-term consequences

- Communicates reasoning in a natural way

This is why many hiring teams using Truffle recommend weaving situational prompts into their screening process, both in async video responses and in structured assessments. Recruiters who use situational prompts consistently report the same pattern: when you ask someone about conflict and push them for a concrete example, it’s a lot harder for ChatGPT to produce something that sounds convincingly human.

How to design situational questions AI can’t fake

Not all situational questions are created equal. Some are still broad enough for AI to bluff. The key is specificity and constraint.

Here are a few design principles:

- Ground the scenario in real context

Instead of “Tell me about a time you handled multiple priorities,” try: “You’re leading a shift when two employees call out sick, the register goes down, and a delivery arrives early. Walk me through your first three steps.”

- Introduce constraints

Add time limits, scarce resources, or authority boundaries. Candidates then have to show creativity under pressure, which AI-generated answers often miss.

- Ask for step-by-step reasoning

Don’t just ask “What would you do?” Ask “Why would you prioritize that step first?” This exposes how the candidate actually thinks.

- Probe for outcomes and learning

Follow up with: “What was the result?” and “What would you do differently next time?” Genuine candidates have real reflections to offer; AI tends to recycle clichés about teamwork and growth.

- Blend with behavioral questions

Situational and behavioral questions work best together. Situational probes test forward-looking reasoning, while behavioral ones check for patterns in past behavior. Together, they make it very hard for AI-scripted prep to pass undetected.

These same principles apply beyond live interviews. Structured assessments, especially those measuring situational judgment, personality tendencies, and environment preferences, resist AI coaching for the same reason: they require candidates to reveal authentic patterns across dozens of scenarios, not just rehearse a single polished answer.

What this means for recruiters

The temptation for candidates to lean on ChatGPT isn’t going away. In fact, some AI-generated answers are so polished they outscore real candidates on dimensions like clarity and grammar.

That means recruiters can’t simply rely on gut instinct to “spot the AI.” Instead, they need structured defenses built into their process:

- Use situational prompts that demand practical reasoning

- Build in follow-up layers to test depth

- Combine structured scoring with human judgment

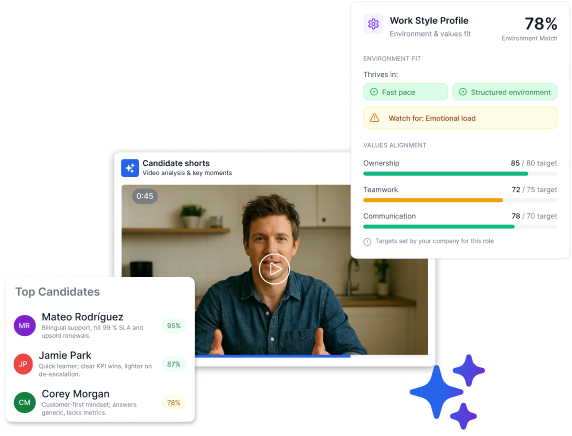

And when possible, pair situational questions with tools that help recruiters review more efficiently. For example, Truffle’s one-way video interviews let candidates record situational answers asynchronously, while recruiters get AI-generated transcripts, match scores with reasoning, Candidate Shorts that surface key moments, and summaries that help prioritize who to interview first. That makes it possible to screen every applicant, not just the top handful, without spending your entire week on phone screens or sacrificing the depth you need to make confident decisions.

Looking ahead

As generative AI gets better, some of today’s weak spots may close. ChatGPT might learn to produce more detailed situational answers, perhaps even simulate plausible context. But for now, situational judgment remains a frontier where human reasoning shines through.

Recruiters who lean on situational prompts aren’t just catching AI cheats. They’re also hiring better, because at its core, the ability to weigh messy trade-offs and adapt under pressure is exactly what predicts success in the workplace.

In a hiring landscape flooded with AI-polished resumes and pre-scripted answers, situational questions remind us of something fundamental: work is unpredictable. The best hires aren’t those with the smoothest scripts, but those who can think, decide, and act when the script runs out.

Key takeaway: Situational questions are harder for ChatGPT to fake because they demand context, detail, and reasoning under constraint. Recruiters who use them, especially in structured, video-based formats, gain a powerful advantage in cutting through the noise of AI-generated applications.
