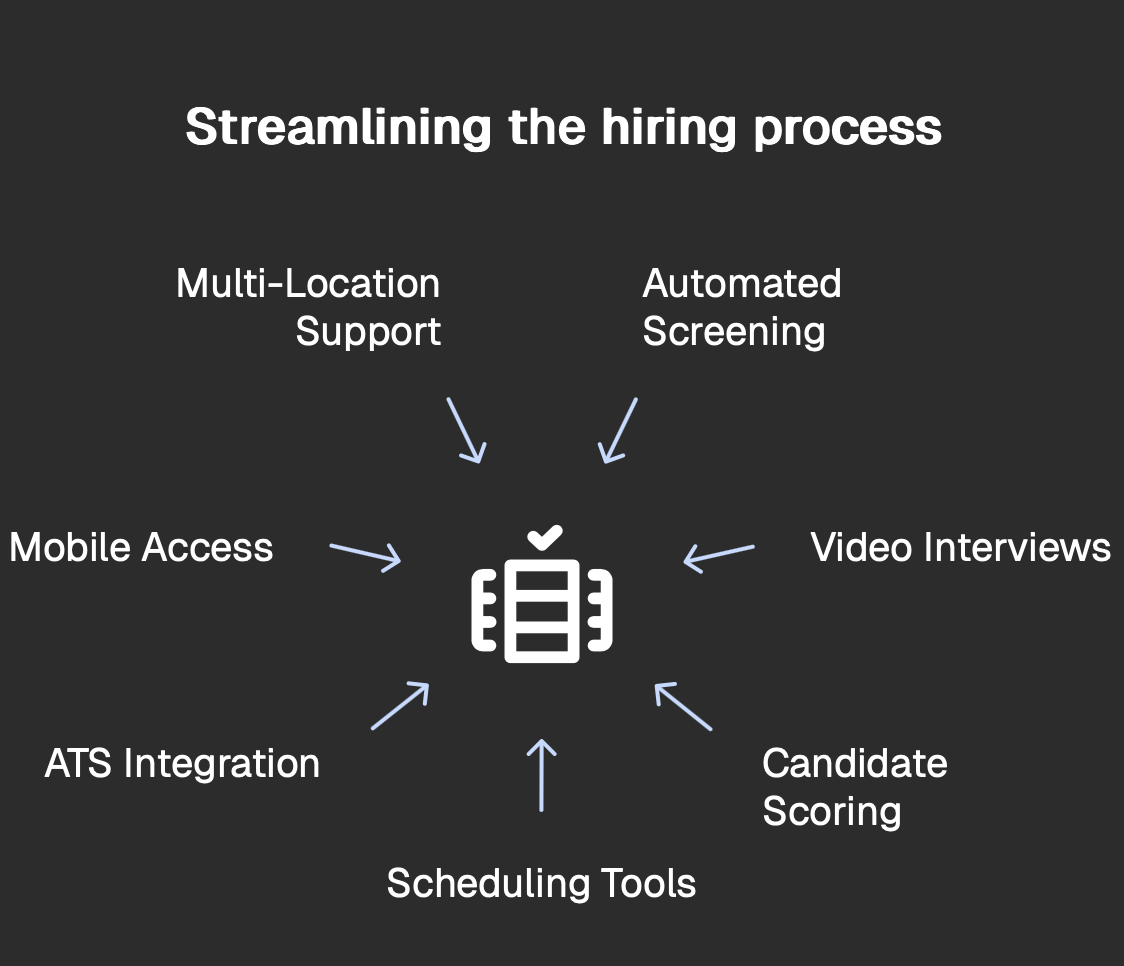

Automated recruiting workflows can make hiring faster and more consistent. They can also amplify yesterday’s patterns if you let historical data drive today’s decisions without controls. This guide shows how to build a fair, compliant workflow that keeps humans in charge while using automation for speed.

We are building Truffle to help small teams screen better, not to replace judgment. Throughout, we reference widely shared viewpoints in HR and policy circles and translate them into practical steps for SMB hiring teams.

⚠️ This advice is for informational purposes only. It is not intended as, and should not be substituted for, consultation with appropriate legal counsel and/or your organization’s regulatory compliance team.

Start with job relatedness

The strongest guardrail is simple. Tie every automated step to the work.

Begin by writing a one-page success profile for the role. Spell out what success looks like in months 1 to 12 and the specific behaviors that make it happen. Use structured screening questions that map directly to those outcomes. Score the content of answers against clear criteria instead of proxies like school, zip code, or a perfectly designed resume.

Automation should rank and summarize. People should decide. Treat scores as triage that helps you spend time on the right candidates, not as verdicts.
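
As a rough illustration of scoring against criteria and using the result only for triage, here is a minimal Python sketch. The `RubricScore` type, the criterion names, and the point scale are assumptions made for this example, not a description of how Truffle or any other tool computes scores.

```python
from dataclasses import dataclass


@dataclass
class RubricScore:
    criterion: str   # hypothetical label, e.g. "problem understanding"
    points: int      # points awarded for this criterion
    max_points: int  # points available for this criterion


def triage_score(scores: list[RubricScore]) -> float:
    """Return the share of available rubric points earned, from 0.0 to 1.0."""
    available = sum(s.max_points for s in scores)
    if available == 0:
        raise ValueError("rubric has no points available")
    return sum(s.points for s in scores) / available


def review_order(candidates: dict[str, list[RubricScore]]) -> list[str]:
    """Order candidates for human review, highest score first.

    Every candidate stays in the list; the score only sets the order.
    """
    return sorted(
        candidates,
        key=lambda name: triage_score(candidates[name]),
        reverse=True,
    )
```

The function returns an ordering rather than a pass or fail result, which keeps the decision with the reviewer.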

Design fairness into the flow

Bias sneaks in through features that look predictive but are really historical shortcuts. Keep your feature set anchored to the job. Avoid weak or sensitive signals and focus on evidence of skills, context, and problem solving.

Calibrate regularly. Review score distributions and pass-through rates by cohort to spot unexplained gaps. If you use knockout rules, confirm they reflect bona fide job requirements and retest them when the role or market changes.
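
A cohort review can start as a small script. The sketch below assumes you can export one `(cohort, advanced)` pair per candidate. The function names are hypothetical, and the default `min_ratio` of 0.8 is an assumption that echoes the four-fifths rule of thumb from US adverse impact guidance. Your counsel may advise a different test.

```python
from collections import Counter


def pass_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute the share of candidates who advanced, per cohort."""
    total: Counter = Counter()
    passed: Counter = Counter()
    for cohort, advanced in outcomes:
        total[cohort] += 1
        if advanced:
            passed[cohort] += 1
    return {cohort: passed[cohort] / total[cohort] for cohort in total}


def cohorts_to_review(rates: dict[str, float], min_ratio: float = 0.8) -> list[str]:
    """List cohorts whose pass rate is below min_ratio times the highest rate."""
    best = max(rates.values(), default=0.0)
    if best == 0:
        return []  # nobody advanced, so there is no gap to compare
    return sorted(c for c, rate in rates.items() if rate / best < min_ratio)
```

A flagged cohort is a prompt to investigate, not proof of bias. Small cohorts produce noisy rates, so read the counts alongside the ratios.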

To limit presentation effects, consider when audio is a better fit than video. Some teams start with audio to emphasize substance over performance, then add video later for roles that require customer presence.

Keep a human in the loop where judgment matters

Recruiting automation software is excellent at removing noise. It is not a replacement for discernment.

For example, with Truffle we recommend using match % and summaries alongside your own judgment. Set reasonable retake and time limits. Too many retakes can reward scripting. Too few can penalize nerves. Aim for a balanced, real-world signal.

Make explainability the default

If you cannot explain why someone advanced, you have a governance gap.

Preserve the evidence. Store transcripts, question prompts, scoring rubrics, and reviewer notes. Prefer interpretable criteria such as role alignment, motivation, problem understanding, and communication clarity. Ask reviewers to add a short rationale when they move someone forward or decline. That simple habit creates a defensible trail without slowing you down.
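
One way to make the rationale habit stick is to refuse a decision record that lacks one. The sketch below is a minimal example under assumed names: `ReviewDecision` and its fields are hypothetical, and a real system would also link to the stored transcript and rubric.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewDecision:
    candidate_id: str
    decision: str        # "advance" or "decline"
    rationale: str       # short reviewer note explaining the decision
    reviewer: str
    rubric_version: str  # which scoring rubric was in force
    decided_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def __post_init__(self) -> None:
        # Reject records that cannot explain themselves later.
        if self.decision not in ("advance", "decline"):
            raise ValueError("decision must be 'advance' or 'decline'")
        if not self.rationale.strip():
            raise ValueError("a short rationale is required")
```

The record is frozen so that an entry in the trail cannot be edited after the fact. Corrections would be added as new records.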

Respect privacy and retention

Collect only what you need to evaluate the role. Set sensible retention windows that are long enough to audit decisions and short enough to minimize risk. Communicate these rules to candidates in plain language.
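
A retention window is easier to honor when it is a single, visible setting. This sketch assumes a hypothetical 365-day window counted from the date the role closed. The right length depends on your jurisdiction and audit needs, so treat the number as a placeholder.

```python
from datetime import date, timedelta

RETENTION_DAYS = 365  # placeholder value; set this with your compliance team


def is_past_retention(
    closed_on: date,
    today: date,
    retention_days: int = RETENTION_DAYS,
) -> bool:
    """Return True once a record is older than the retention window."""
    return today > closed_on + timedelta(days=retention_days)
```

A scheduled job could call `is_past_retention` for each stored interview and queue expired records for deletion.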

If you hire in Europe, apply GDPR principles. Limit purpose, control access, and honor candidate rights. When you share interviews with broader panels or clients, remove direct identifiers where feasible so people evaluate the content, not identity.
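
Removing direct identifiers before sharing can be sketched as a simple field filter. The field names in `DIRECT_IDENTIFIERS` are assumptions for this example, so map them to whatever your system stores.

```python
# Hypothetical field names; adjust to match your own candidate records.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "photo_url"}


def redact_for_panel(record: dict) -> dict:
    """Return a copy of the record without direct identifier fields."""
    return {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS
    }
```

This only drops whole fields. It does not catch a name or employer mentioned inside a transcript, which is why the guidance says "where feasible".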

Be explicit about what automation will not do

Set expectations with managers and candidates. Do not use fully automated rejections. Use AI flags for review, not as automatic disqualifiers. Avoid novelty features that cannot be explained. The goal is a clear, auditable process that any reasonable person can understand.
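
The "no fully automated rejections" rule can be built into the routing itself by giving automation no reject outcome at all. In this sketch the `Route` names, the `route` function, and the 0.7 threshold are all hypothetical.

```python
from enum import Enum


class Route(Enum):
    # Every route ends with a human; there is deliberately no reject option.
    PRIORITY_REVIEW = "priority_review"
    STANDARD_REVIEW = "standard_review"
    FLAGGED_REVIEW = "flagged_review"


def route(score: float, ai_flags: list[str], priority_threshold: float = 0.7) -> Route:
    """Pick a human review queue for a candidate based on score and flags."""
    if ai_flags:
        # A flag asks a person to look closer; it does not disqualify.
        return Route.FLAGGED_REVIEW
    if score >= priority_threshold:
        return Route.PRIORITY_REVIEW
    return Route.STANDARD_REVIEW
```

Because a low score lands in `STANDARD_REVIEW` rather than a rejection, any adverse decision still has to come from a reviewer.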

Train teams on responsible use

Tools are only as fair as the people using them.

Teach what the score means. A high score is a strong reason to review, not a command to hire. Standardize pass thresholds by role so similar candidates get similar outcomes. Encourage structured feedback that mirrors the scoring criteria to strengthen your validation loop over time.

Hold vendors to a clear standard

Ask every hiring software provider the same core questions.

Which features influence scores? How do questions map to job criteria? What audit artifacts can you export? How are retention, consent, and regional hosting handled? Can knockouts be edited or disabled? How are explanations shown to users and reviewers?

Choose partners who show their work. If you cannot see the ingredients, you cannot stand behind the outcome.

A quick checklist to put in play this week

- Write the role success profile and share it with every interviewer

- Use 4 to 6 job-linked questions with scored rubrics

- Set retake and timing rules for fairness and realism

- Enable transcripts and require short reviewer notes

- Review pass rates by cohort once a month

- Publish retention rules candidates can understand

- Provide a contact point for appeals or corrections

- Confirm humans review all adverse decisions

The business impact

Operationalizing fairness does more than reduce risk. It increases confidence, speeds decisions, and improves candidate experience. Teams that pair automation with transparent controls spend less time on noise and more time on judgment, which is where great hiring still happens.