If we have ever wondered whether AI can run a first-round interview without hurting quality or candidate experience, we finally have large-scale evidence. A new natural field experiment with 70,000 applicants compared human recruiters with AI voice interviewers and found that AI lifted job offers, job starts, and 30-day retention while keeping satisfaction steady. In plain terms, structured, AI-assisted interviews surfaced stronger signal for human decision-makers without degrading candidate experience.

Below we translate the study into practical playbooks for lean teams. We highlight where AI interviews shine, where they can fail if left on autopilot, and how to build a simple, defensible workflow that gives hiring managers stronger signal with less effort.

The research at a glance

The study is a large natural field experiment, not a lab simulation. Applicants were randomly assigned to one of three paths: interviewed by a human, interviewed by an AI voice agent, or given a choice between the two. Human recruiters still made the decisions in all conditions, based on interview performance and a standardized test, which keeps the outcomes realistic for a business setting.

Top line results

- Job offers increased by roughly 12% when interviews were conducted by the AI voice agent rather than by humans

- Job starts rose about 18%, again in favor of AI-led interviews

- Thirty-day retention increased around 17% for the AI path, suggesting the hires were not only more likely to start but also more likely to stick through the first month

- When offered a choice, 78% of applicants chose the AI interviewer. Applicants accepted offers at similar rates and rated the experience, and the recruiters, similarly across conditions. In short, the AI interview did not degrade candidate satisfaction.

One more striking detail. Before seeing the results, most recruiters expected AI led interviews to perform worse on quality and retention. The forecast bias was clear, and the experiment overturned it.

Why this matters for us

If the first round can be structured and AI-assisted without hurting signal, we reclaim the hours that usually disappear into phone-screening every applicant, and we can finally screen everyone without talking to everyone. That time can shift to better downstream interviews, feedback, and onboarding. For small and mid-sized teams, this is the difference between reactive hiring and a measured, repeatable process.

Why AI interviews performed better

The authors did not stop at outcomes. They analyzed interview transcripts to unpack what AI interviewers actually collected from candidates. The short version: AI elicited more hiring relevant information. Interviews run by the voice agent covered more topics, had more complete exchanges, and produced content that better predicted offer decisions.

Two empirical layers stand out:

- Content that recruiters care about predicts hiring

Recruiters’ numeric interview ratings and their free text assessments both strongly predicted offers. These are not vanity scores. They correlated tightly with final decisions even after controlling for test scores.

- Transcript quality matters

Interviews that were comprehensive and covered more core topics were more likely to result in offers. The team constructed linguistic features like vocabulary richness, syntactic complexity, discourse markers, filler words, backchannel cues, the number of exchanges, and style matching. The more a session looked like a complete, well probed interview, the stronger its predictive power.
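To make a few of these features concrete, here is a minimal Python sketch. It is not the study's feature pipeline: the filler list, the tokenization, the speaker labels, and the function name `transcript_features` are all assumptions chosen for illustration. It computes vocabulary richness as a simple type-token ratio, a filler-word rate, and the number of exchanges from a transcript given as (speaker, text) turns.

```python
import re

# Hypothetical filler list; the study's actual lexicon is not given in this article.
FILLERS = {"um", "uh", "er", "hmm"}

def transcript_features(turns):
    """Compute simple features from a list of (speaker, text) turns.

    Speakers are labeled "interviewer" or "candidate" (an assumed convention).
    """
    candidate_words = []
    for speaker, text in turns:
        if speaker == "candidate":
            candidate_words.extend(re.findall(r"[a-z']+", text.lower()))

    total = len(candidate_words)
    # Type-token ratio: distinct words divided by total words.
    richness = len(set(candidate_words)) / total if total else 0.0
    filler_rate = sum(w in FILLERS for w in candidate_words) / total if total else 0.0
    # One exchange = an interviewer turn directly followed by a candidate turn.
    exchanges = sum(
        1
        for (first, _), (second, _) in zip(turns, turns[1:])
        if first == "interviewer" and second == "candidate"
    )
    return {
        "vocab_richness": richness,
        "filler_rate": filler_rate,
        "exchanges": exchanges,
    }

# Toy transcript, invented for illustration only.
turns = [
    ("interviewer", "Why do you want this role?"),
    ("candidate", "Um, I like helping customers solve problems."),
    ("interviewer", "Tell me about a hard call."),
    ("candidate", "I listened, then I fixed the billing problem."),
]
features = transcript_features(turns)
```

A type-token ratio is sensitive to answer length, so in practice we would compare it only across interviews of similar length or use a length-normalized variant.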

The mechanism is intuitive. AI interviewers follow the script, probe consistently, and do not tire. That yields denser, cleaner signal for human decision-makers. In the study, recruiters actually scored AI-interviewed candidates higher, then put slightly more weight on standardized tests when forming decisions, which suggests AI raised the baseline interview quality and encouraged a balanced view of hard and soft indicators.

What about attrition quality? Among those who left within 30 days, the reasons for leaving looked the same across AI and human paths, which implies the retention lift comes from better initial matches rather than a quirky funnel effect.

Candidate experience is not the blocker many expect

We often hear the worry that AI interviews feel impersonal or that candidates will opt out. The data says otherwise. Given the option, a large majority chose AI, and experience ratings were similar whether the interviewer was human or AI. Offer acceptance rates did not suffer either. For busy teams that need high response rates, this is an important myth to retire.

A nuance to watch: candidates with lower standardized test scores were more likely to select AI when given the choice. That makes choice architecture a design lever. We can offer both modes, but we should route everyone through the same evaluation rubric and keep the decision line with humans.

Where AI interviews can go wrong

The risk is not the interview. It is over-automation of the decision. The study kept humans in charge, and so should we. In regulated markets, a system that auto-rejects or issues a blanket “not recommended” label creates compliance exposure and trust issues. A safer posture is to summarize, score criteria, rank, and leave the decision to recruiters. That is also our product stance. We generate match scores and structured summaries, yet we do not issue a final hire or no-hire directive. That balance preserves human judgment and aligns with emerging guidance.
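The posture described above (rank, but leave the decision to a named human) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Truffle's implementation: the `Candidate` fields, the 0 to 100 score scale, and the function names `ranked` and `record_decision` are hypothetical. The point is that ranking never filters anyone out, and a disposition exists only once a reviewer records it.

```python
from dataclasses import dataclass
from typing import Optional

# Dispositions a human reviewer may record; there is deliberately no automatic "reject".
HUMAN_DISPOSITIONS = {"advance", "pass", "hold"}

@dataclass
class Candidate:
    name: str
    match_score: float                 # hypothetical 0-100 score from the AI summary step
    disposition: Optional[str] = None  # stays None until a recruiter decides
    reviewer: Optional[str] = None
    note: str = ""

def ranked(candidates):
    """Return all candidates sorted by match score, highest first. Nobody is dropped."""
    return sorted(candidates, key=lambda c: c.match_score, reverse=True)

def record_decision(candidate, reviewer, disposition, note=""):
    """Store a human decision with the reviewer's name so it can be audited later."""
    if disposition not in HUMAN_DISPOSITIONS:
        raise ValueError(f"unknown disposition: {disposition}")
    if not reviewer:
        raise ValueError("a named human reviewer is required")
    candidate.disposition = disposition
    candidate.reviewer = reviewer
    candidate.note = note
    return candidate

# Toy pool, invented for illustration only.
pool = [Candidate("A", 62.0), Candidate("B", 88.5), Candidate("C", 41.0)]
shortlist = ranked(pool)
record_decision(shortlist[0], reviewer="recruiter_1", disposition="advance",
                note="Strong scenario answers")
```

Keeping the reviewer and note on the record is what makes the decision auditable; the score only orders the queue.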

We also need to mind design details that shape outcomes:

- Interview length and retake limits

- Question clarity and job relevance

- Clear, fair scoring rubrics that tie to the job description

These details determine whether the AI interviewer collects comprehensive, predictive signal or just long transcripts no one will use.

A practical setup we can run this quarter

We do not need to rebuild our stack. A lightweight workflow gets us most of the gains the study observed.

1) Define the match logic before we invite anyone

Write the job description and a short intake that states what success looks like in 6 to 12 months and the traits that separate strong from average hires. This intake powers the criteria and makes the summaries useful rather than generic.

2) Use a short bank of structured first round questions

Aim for five to eight questions that probe motivation, role understanding, problem solving, and job specific scenarios. Keep answers short, set retake rules, and make the time visible to the candidate. The goal is full topic coverage, not trick questions.

3) Let AI summarize the interview, then give your team ranked signal

Recruiters should open a single view that shows a candidate summary, the match score, strength and challenge themes, and transcripts per question. That is the fast path to the top five out of eighty.

4) Keep the decision human, visible, and auditable

Record evaluator notes or quick dispositions like advance, pass, or hold. Do not auto reject. If we need a tiebreaker, use standardized tests or work samples to triangulate.

5) Track downstream outcomes

Monitor job starts and 30 day retention by path. If the field experiment is any guide, consistently structured and complete first rounds can improve the signal quality available to decision-makers.

6) Offer a choice only if we can keep evaluation constant

If we let candidates pick, keep the rubric identical and route everyone to the same reviewer pool. Choice does not hurt by itself, but it should not create parallel standards.
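Step 5 above is easy to automate. The sketch below, in Python, assumes each applicant is a record with a `path` label and three boolean outcomes; those field names and the toy data are hypothetical, and the rates are unconditional (per applicant on that path), which is how the study's headline lifts are framed in this article.

```python
from collections import defaultdict

OUTCOMES = ("offered", "started", "retained_30d")

def rates_by_path(records):
    """Per-path outcome rates, computed per applicant assigned to that path."""
    counts = defaultdict(lambda: {"n": 0, **{k: 0 for k in OUTCOMES}})
    for r in records:
        c = counts[r["path"]]
        c["n"] += 1
        for key in OUTCOMES:
            c[key] += int(r[key])
    return {
        path: {k: c[k] / c["n"] for k in OUTCOMES}
        for path, c in counts.items()
    }

def relative_lift(treatment_rate, baseline_rate):
    """Relative lift over baseline; 0.12 means 12 percent higher."""
    if baseline_rate == 0:
        raise ValueError("baseline rate is zero; relative lift is undefined")
    return (treatment_rate - baseline_rate) / baseline_rate

def make(path, offered, started, retained_30d):
    return {"path": path, "offered": offered, "started": started,
            "retained_30d": retained_30d}

# Toy records, invented for illustration; these are not the study's data.
records = [
    make("human", True, True, True),
    make("human", True, False, False),
    make("human", False, False, False),
    make("human", False, False, False),
    make("ai", True, True, True),
    make("ai", True, True, False),
    make("ai", True, False, False),
    make("ai", False, False, False),
]
rates = rates_by_path(records)
offer_lift = relative_lift(rates["ai"]["offered"], rates["human"]["offered"])
```

With real volumes we would also want confidence intervals before reading much into a lift, since small samples swing widely.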

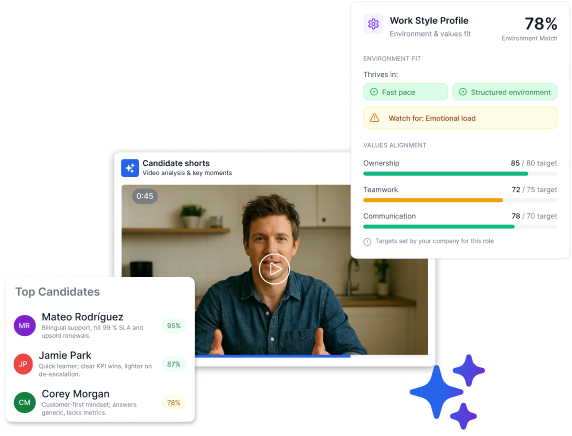

How this maps to Truffle’s approach

We built Truffle as an AI-assisted screening platform that surfaces signal across multiple dimensions, so you can screen every applicant without phone-screening all of them.

- Job-specific matching using your description and a short intake, which tunes criteria to the role rather than using a one-size score

- Async video interviews with time limits and retake controls that keep sessions real and repeatable

- AI-resistant assessments (Personality, Situational Judgment, Environment Fit) that measure what AI can't fake: personality tendencies, situational approach, and work environment preferences

- AI summaries per question plus a rolled-up candidate view, including transcripts for transparency and calibration

- Ranked candidate lists that consistently surface the top cohort, so you spend time where it counts and avoid resume roulette

- Human-in-the-loop decisions that respect compliance and keep judgment with your team. We do not output a final hire verdict. We provide structured evidence and a defensible short list.

This design mirrors the study’s guardrails. AI facilitates the interview and organizes the signal. Humans review and select.

Design choices that lift signal without lifting risk

Based on the evidence and our customer rollouts, a few choices pay off quickly.

Ask scenario questions that require judgment

We want language that elicits causal reasoning and sequencing. That is where differences show up in vocabulary richness, syntactic complexity, and discourse markers, which the study found predictive. Keep the prompts real to the job.

Push for complete topic coverage

If an interview does not touch key areas like role understanding, motivation, problem solving, collaboration, and customer handling, our signal is thin. The AI interviewer should probe until the core set is covered, then close. Comprehensive interviews predicted offers better than partial ones.
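A coverage check like the one described above can be sketched very simply. The Python below uses naive keyword matching purely for illustration; the keyword lists and the function name `missing_topics` are assumptions, and a production interviewer would likely need something more robust than substring hits. The core topics themselves come from the paragraph above.

```python
# Core topics are taken from the text; the keyword lists are illustrative guesses.
CORE_TOPICS = {
    "role understanding": ["responsibilities", "day to day", "the role"],
    "motivation": ["motivat", "why i want", "excited"],
    "problem solving": ["solved", "figured out", "root cause"],
    "collaboration": ["team", "coworker", "together"],
    "customer handling": ["customer", "client", "complaint"],
}

def missing_topics(candidate_text, topics=CORE_TOPICS):
    """Return the topics with no keyword hit in the candidate's answers so far."""
    text = candidate_text.lower()
    return [
        topic
        for topic, keywords in topics.items()
        if not any(keyword in text for keyword in keywords)
    ]

# Toy answer text, invented for illustration only.
answers = (
    "I was excited to apply. A customer had a complaint "
    "and I found the root cause with my team."
)
gaps = missing_topics(answers)  # topics the interviewer should still probe
```

The interviewer would keep probing while `gaps` is non-empty and close once it is empty or the time limit is reached.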

Tie evaluation to both interview and objective tests

The uplift in starts and retention came with humans balancing rich interviews and standardized tests. Keep that balance. It reduces the risk of over indexing on any single dimension.

Be transparent with candidates

If we use AI to run the interview, say so, and explain why. The study shows candidates are comfortable, and many prefer it, but clarity builds trust.

What results should we expect

No two funnels are the same, but the field experiment gives us a baseline. If we replace unstructured, human-led first rounds with AI-assisted, structured interviews, while keeping humans in charge of decisions, the study suggests we may see:

- More completed, comparable interviews in less time

- Changes in how candidates move through the funnel

- Different patterns in early retention

The study's unconditional lifts are the right orientation points: roughly 12 percent more offers, 18 percent more starts, and 17 percent better 30-day retention for the AI path. Our absolute numbers will depend on role, volume, and process design, but the direction is consistent when the interview is complete and criteria-based.

The business impact that follows

Hiring is the bottleneck behind most operating bottlenecks. If we can move the first round from a manual scheduling treadmill to a consistent, AI led interview that yields stronger signal, we unlock three compounding effects.

Speed protects revenue

Fewer days open means fewer days understaffed. When offer and start rates rise, time to productivity accelerates.

Quality reduces rework

Early retention is a blunt measure, yet it correlates with better fit and less churn work for managers. We can move that metric without adding friction.

Fairness and transparency scale with us

Structured probing and transcripts give us a clean audit trail. Humans still make the choice, and we can defend why, which matters to candidates and regulators alike.

The signal is clear. AI can run the first round, and when designed well, it improves outcomes that matter to the business. Our job is to set the rules, keep the decision human, and let the system do what humans are bad at doing consistently, which is asking the same good questions the same way every time.

If we do that, we win back time and ship better hires. The data is finally on the table. Now it is a process choice.

