Start screening smarter today

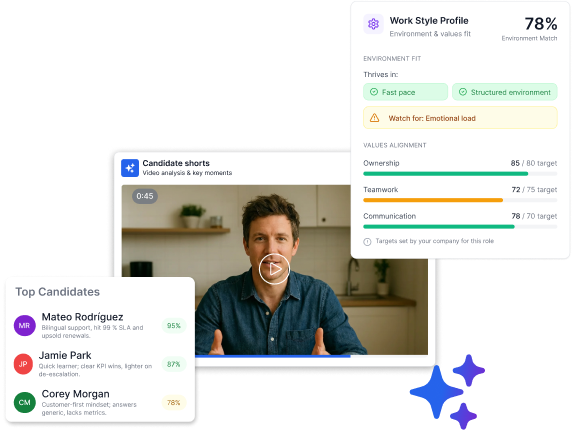

Try Truffle free for 7 days—no credit card required. See how AI-powered video interviews help you shortlist top candidates in minutes, not days.

Start free trial

Start for free

Evaluating talent is a high-leverage leadership skill—yet many organizations still rely on unstructured interviews, vague “values alignment” instincts, and inconsistent performance signals.

That approach can lead to mis-hires, inequitable decisions, and unclear growth pathways for internal talent. This assessment is designed to help you build a more consistent, repeatable approach to evaluating talent across both hiring and internal mobility (promotions, succession, lateral moves, and development planning).

Unlike tool roundups or generic “best practices” lists, this assessment checks whether you can apply research-informed evaluation practices: define what to measure (skills, behaviors, potential, values), choose appropriate methods, score consistently using anchored rubrics, run calibration to improve reliability, and monitor outcomes to understand what’s working over time. It draws on principles used in structured hiring, job analysis, competency modeling, and responsible measurement.

This is for recruiters, hiring managers, HR/People leaders, and L&D partners who want clearer decision criteria, fairer processes, and faster ramp time without adding unnecessary process. If you’re building structured interviews, improving performance and promotion decisions, or launching skills-based internal mobility, you’ll find practical templates, scoring guidance, and role-specific scenarios.

Take the assessment end-to-end to identify your current level, pinpoint breakdowns in your process, and leave with a roadmap you can implement immediately, whether you’re operating with a lean startup hiring loop or an enterprise-grade governance model.

Evaluating talent is not a single skill—it’s an operating system that combines decision quality, job-relevant measurement, and disciplined execution. Many teams struggle in one of three places:

This assessment measures your ability to design and run a complete talent evaluation loop that supports:

This assessment uses a single model that can be applied consistently across hiring and internal decisions.

S — Skills (Can they do the work?)

B — Behaviors (How do they work?)

P — Potential (How far can they grow?)

V — Values / Culture Add (How do they strengthen the system?)

Key distinction: Skills and behaviors are primarily current-state; potential is future-state; values are guardrails.

Choose methods based on role risk, seniority, and the type of signal you need:

This assessment references commonly used concepts in selection and performance evaluation:

If your process can clearly explain what you measured, how you measured it, and why it is job-relevant, it’s easier to scale and audit.

This is a practical, scenario-based assessment with scoring across five capability domains. You’ll answer 9 scenarios. Each scenario maps to one or more domains.

Job analysis, must-have vs. trainable, proficiency levels, success metrics

Choosing higher-signal methods; sequencing; minimizing noise; feasibility

Scorecard criteria, BARS (behaviorally anchored rating scale) anchors, weighting, evidence standards

Debrief discipline, decision rules, documentation, feedback quality

Bias mitigation mechanics, accommodations, adverse impact monitoring, KPI loop

For each scenario, your answer should explicitly state:

Each scenario is rated on a 0–4 scale:

Each scenario has a weight aligned to the domains.

Total score = sum of weighted scenario scores, scaled to a 0–100 range.
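The scoring arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not the assessment's official scoring key: the scenario weights are hypothetical placeholders, and the sketch assumes that ratings use the 0–4 scale and that weights sum to 100, so a top rating earns a scenario's full weight.

```python
def total_score(ratings, weights):
    """Convert 0-4 scenario ratings into a weighted 0-100 total.

    ratings and weights are parallel lists, one entry per scenario.
    Assumption: weights sum to 100, so each scenario contributes
    weight * (rating / 4) points.
    """
    if len(ratings) != len(weights):
        raise ValueError("ratings and weights must have the same length")
    if any(r < 0 or r > 4 for r in ratings):
        raise ValueError("each rating must be between 0 and 4")
    if abs(sum(weights) - 100) > 1e-9:
        raise ValueError("weights must sum to 100")
    return sum(w * r / 4 for r, w in zip(ratings, weights))


# Hypothetical weights for the 9 scenarios (illustrative only).
WEIGHTS = [12, 12, 14, 10, 12, 12, 8, 10, 10]
ratings = [3, 2, 4, 3, 2, 1, 3, 2, 3]
print(round(total_score(ratings, WEIGHTS), 1))
```

Keeping the weights in one list makes it easy to rebalance domains later without touching the scoring logic.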

Use these thresholds to set your own team standards:

Use these as both an assessment and a training tool for your interviewers/managers.

You’re hiring a Customer Success Manager (mid-level). The hiring manager says: “We need someone proactive who can manage accounts and reduce churn.”

Prompt: Create a one-page success profile: 6–8 criteria maximum. Label each as must-have or trainable. Define what “strong evidence” looks like.

What strong answers include (scoring hints):

You’re hiring Account Executives with high applicant volume. You have 10 days to fill roles and can’t add more than 2.5 hours of interview time per candidate.

Prompt: Build a minimum viable assessment plan (stages + methods) that balances speed and signal. Include what each stage measures (SBPV).

Watch-outs:

Design a scorecard for a Software Engineer (backend) onsite/virtual loop.

Prompt: Provide 5–6 criteria with BARS anchors for one criterion (e.g., “system design”). Include rating definitions for 1, 3, 5 on a 5-point scale.

High-quality anchor example (what we’re looking for):

You have two internal candidates for Team Lead.

Prompt: Define how you would evaluate readiness vs. potential vs. role behaviors. What evidence would you require before promoting?

Common failure: promoting the strongest IC without validating leadership behaviors.

Panel feedback on a candidate is split:

Prompt: Write a 20-minute calibration agenda and decision rule. How do you resolve conflict without politics?

Strong answers include:

Your hiring funnel shows a drop-off for one demographic group at the take-home assignment stage.

Prompt: List 5 actions you would take in the next 30 days to diagnose and reduce adverse impact while preserving job-relevance.

High-signal actions:
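One diagnostic commonly used for this kind of funnel check is the impact ratio: each group's pass rate at a stage divided by the highest group's pass rate, with ratios below 0.8 (the "four-fifths" rule of thumb from US selection guidelines) flagged for closer review. The sketch below is illustrative only. The group labels and counts are made up, and a flagged ratio is a prompt to investigate the stage, not a conclusion about it.

```python
def impact_ratios(stage_counts, threshold=0.8):
    """Return each group's pass rate, impact ratio, and a review flag.

    stage_counts maps a group label to (passed, attempted) for one stage.
    The impact ratio is the group's pass rate divided by the highest
    group's pass rate.
    """
    rates = {}
    for group, (passed, attempted) in stage_counts.items():
        if attempted <= 0:
            raise ValueError(f"no attempts recorded for {group}")
        rates[group] = passed / attempted
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group passed this stage")
    return {
        group: {
            "pass_rate": rate,
            "impact_ratio": rate / best,
            "flag": rate / best < threshold,
        }
        for group, rate in rates.items()
    }


# Made-up take-home stage counts: (passed, attempted).
take_home = {"group_a": (45, 90), "group_b": (18, 60)}
for group, result in impact_ratios(take_home).items():
    print(group, round(result["impact_ratio"], 2), result["flag"])
```

With small samples the ratio is noisy, so it is worth pairing this check with a review of the assignment itself (time required, scoring rubric, accommodations) before drawing conclusions.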

A candidate was rejected after the final stage and asks for feedback.

Prompt: Provide a feedback note aligned to the scorecard that is specific, respectful, and avoids unnecessary legal risk.

What “good” looks like:

You’re launching an internal mobility program. Employees want to move into Operations Analyst roles.

Prompt: Propose a lightweight skills evaluation process and how you’ll map results to learning plans. Include how you’ll prevent managers from blocking moves.

Strong answers include:

Leadership says: “We’ll invest in structured evaluation if you can show it’s working.”

Prompt: Define a KPI dashboard for evaluating talent. Include leading and lagging indicators, review cadence, and how you’ll use results to iterate.

Recommended KPIs:

Profile: Decisions rely on gut feel, résumé signals, and unstructured interviews. Scorecards are absent or decorative.

Impact: Higher mis-hire risk, inconsistent internal decisions, greater bias exposure.

Actionable focus (next 30 days):

Profile: Some structure exists (common questions, basic rubric) but weak anchors and inconsistent calibration.

Impact: Improvements show up sporadically; interviewer variance remains high.

Actionable focus:

Profile: Strong success profiles, job-relevant methods, consistent scoring, and disciplined debriefs.

Impact: Higher signal-to-noise, better hiring manager confidence, more defensible promotion decisions.

Actionable focus:

Profile: You operate an end-to-end system across hiring and internal mobility, with governance, measurement, and continuous improvement.

Impact: Compounding gains in quality of hire, internal fills, retention, and fairness; scalable across job families.

Actionable focus:

Week 1: Conduct a role intake and write a success profile.

Week 2: Create a scorecard with 6 criteria and a 1–5 rating scale.

Week 3: Convert 6 interview questions into structured behavioral questions tied to criteria.

Week 4: Run a structured debrief and capture evidence in a consistent template.

Deliverable: one role with a complete, repeatable evaluation packet.

Add BARS anchors for the 3 highest-impact criteria.

Implement interviewer training: evidence vs. inference, note-taking standards, bias interrupts.

Introduce calibration: compare rating distributions; resolve rubric drift.

Deliverable: documented rubrics + trained panel + debrief discipline.

Standardize method selection by role family (SWE, Sales, CS, Ops, People Manager).

Create a KPI dashboard: ramp time, retention, pass-through rates.

Establish a quarterly audit cadence and iteration loop.

Deliverable: scaled templates + measurement supporting continuous improvement.
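As a starting point for the dashboard, pass-through rates can be computed directly from stage counts. The sketch below assumes a simple ordered funnel. The stage names and numbers are hypothetical, and a real dashboard would likely pull these counts from an applicant tracking system.

```python
def pass_through_rates(funnel):
    """Compute stage-to-stage pass-through rates for an ordered funnel.

    funnel is a list of (stage_name, candidate_count) in pipeline order.
    Returns a list of (from_stage, to_stage, rate) tuples.
    """
    results = []
    for (prev_name, prev_count), (next_name, next_count) in zip(funnel, funnel[1:]):
        # Guard against an empty stage so the division never fails.
        rate = next_count / prev_count if prev_count else 0.0
        results.append((prev_name, next_name, rate))
    return results


# Illustrative counts only.
funnel = [("applied", 400), ("screen", 120), ("interview", 40), ("offer", 8), ("hired", 6)]
for src, dst, rate in pass_through_rates(funnel):
    print(f"{src} -> {dst}: {rate:.0%}")
```

Running the same calculation per role family and per quarter gives the audit cadence something concrete to compare.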

Align hiring and internal evaluation criteria to a shared competency/skills architecture.

Create internal mobility pathways with transparent proficiency expectations.

Build an adverse impact monitoring and remediation workflow.

Deliverable: unified “Talent Evaluation OS” adopted across the organization.

Benchmarks vary by industry and maturity, but these are widely used targets to anchor improvement:

Reduce interviewer “spread” (variance) over time through anchors and training.

Track how often debriefs reference evidence vs. impressions.
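One simple way to quantify interviewer spread is the standard deviation of the ratings each candidate receives on a given criterion, averaged across candidates. The sketch below is one possible measure rather than a standard one; the candidate labels and ratings are invented for illustration.

```python
from statistics import mean, pstdev


def rating_spread(ratings_by_candidate):
    """Per-candidate spread (population standard deviation) of interviewer
    ratings, plus the average spread across candidates.

    Candidates with fewer than two ratings are skipped because spread
    is not meaningful for a single rating.
    """
    per_candidate = {
        candidate: pstdev(ratings)
        for candidate, ratings in ratings_by_candidate.items()
        if len(ratings) >= 2
    }
    if not per_candidate:
        raise ValueError("need at least one candidate with two or more ratings")
    return per_candidate, mean(per_candidate.values())


# Invented ratings on a 1-5 scale for one criterion.
panel_ratings = {"candidate_1": [3, 4, 3], "candidate_2": [2, 5, 3]}
spread, average_spread = rating_spread(panel_ratings)
print(spread, average_spread)
```

Tracking the average before and after adding anchors or training gives a rough read on whether interviewers are converging.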

Role: ____ Level: ______

Must-meet criteria (gate):

Differentiators:

3) ____ (Skills/Behaviors/Potential) Weight: __

4) ______ Weight: __

Values / Culture add (non-negotiables defined):

5) ________ Weight: __

Rating scale (example): 1 Below / 2 Mixed / 3 Meets / 4 Strong / 5 Exceptional

Evidence requirement:
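The template's structure (must-meet gates first, then weighted differentiators and values criteria) can be expressed as a small data model. This is a sketch under stated assumptions: the `Criterion` class, the `evaluate` function, the criterion names, and the rule that a gate passes at a rating of 3 ("Meets") or higher are all hypothetical choices, not part of the template itself.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    rating: int          # 1-5 on the example rating scale
    weight: float = 0.0  # used for differentiators and values criteria
    gate: bool = False   # True for must-meet criteria


def evaluate(criteria, gate_minimum=3):
    """Apply must-meet gates first, then a weighted average of the rest.

    Assumption: a gate passes at rating >= gate_minimum.
    Returns (gates_passed, weighted_average), where the average is None
    if a gate fails or no weighted criteria are present.
    """
    gates_passed = all(c.rating >= gate_minimum for c in criteria if c.gate)
    weighted = [c for c in criteria if not c.gate]
    total_weight = sum(c.weight for c in weighted)
    if not gates_passed or total_weight == 0:
        return gates_passed, None
    average = sum(c.rating * c.weight for c in weighted) / total_weight
    return gates_passed, average


# Hypothetical scorecard for illustration.
scorecard = [
    Criterion("account planning", 4, gate=True),
    Criterion("stakeholder communication", 3, gate=True),
    Criterion("data analysis", 4, weight=40),
    Criterion("learning agility", 3, weight=30),
    Criterion("values: ownership", 5, weight=30),
]
print(evaluate(scorecard))
```

Separating gates from weighted criteria keeps a strong differentiator score from masking a missed must-have.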

Work Rules! (Laszlo Bock) — practical hiring system insights (use critically)

The Manager’s Path (Camille Fournier) — evaluating engineering growth and leadership

Thinking, Fast and Slow (Daniel Kahneman) — decision biases relevant to evaluation

Structured interviewing and inclusive hiring training (internal L&D or reputable providers)

People analytics fundamentals (to build measurement literacy)

If you adopt software, prioritize features that encourage consistent evaluation behavior:

Position yourself as the owner of selection quality (not just time-to-fill).

Bring a quarterly narrative: “Our evaluation changes are helping us reduce ramp time and improve early performance signals.”

Build enablement: interviewer certification, rubric libraries, calibration governance.

Treat success profiles and scorecards as a management tool: they clarify expectations post-hire.

Use the same criteria for onboarding and 30/60/90 plans (tight feedback loop).

Reduce team churn by making promotion readiness evidence-based.

Unify hiring competencies with performance and internal mobility frameworks.

Build skills-based pathways: clear proficiency, transparent movement rules, development plans.

Lead fairness audits with practical remediation (not just policy).

Evaluating talent at a high level means you can:

Use the scenarios above as your ongoing practice set. If you want to operationalize this quickly, convert your next open role into a complete evaluation packet: success profile, method plan, scorecard with anchors, debrief agenda, and a simple KPI dashboard that closes the loop.