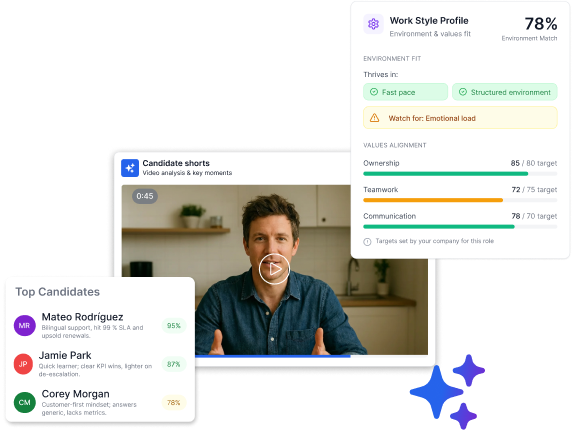


An associate product management test is designed to answer one question quickly and consistently: can you handle the core work an APM is likely to do—using sound judgment, structured thinking, and clear communication—without needing years of prior product ownership?

This assessment package is built specifically for Associate Product Manager (APM) hiring and practice. It reflects formats candidates commonly face (timed multiple-choice, scenario-based judgment questions, metrics interpretation, prioritization, and a mini writing/PRD-style prompt), organized into a transparent set of focus areas. Every sample question is tagged to an area, and the scoring model shows what “strong” tends to look like at the associate level.

If you’re a candidate, you’ll get realistic practice plus rationales, common pitfalls, and a focused 7-day and 14-day roadmap to improve your results.

If you’re hiring, you’ll get a structured blueprint: definitions for each area, suggested time limits, optional score-band interpretation, and follow-up prompts to validate results in interviews.

Use this page as a self-contained simulator: review the focus areas, take the sample set under time pressure, score yourself by area, and then follow the remediation plan tied to your weakest domain. That’s how you turn “I want an APM role” into clearer, job-relevant signal.

Many popular assessment pages are not calibrated to what an Associate Product Manager is typically expected to do.

Common gaps include:

This package addresses those gaps with an APM-specific structure, a multi-format sample test, and an easy-to-apply scoring model.

An APM is often evaluated on execution-ready product thinking—not long-horizon strategy ownership.

This assessment focuses on seven practical areas that show up repeatedly in APM interview loops:

This package blends formats commonly used in skills-based hiring and practice:

Section A: Timed MCQ + scenario items (25–35 minutes), 20–25 items

Section B: Metrics & prioritization mini-case (20 minutes), 4–6 prompts

Section C: Mini-PRD / writing prompt (15–30 minutes), 1 prompt

For candidates: simulate the real thing by setting a timer and writing your reasoning (not just answers).

Below are 10 realistic sample items. Use them as a mini practice set.

Scenario: You’re improving a campus food delivery app. Students complain: “It takes too long to reorder my usual meal.”

Question (MCQ): What’s the best MVP to test impact on reorder time?

A. Add AI meal recommendations across the app

B. Add a “Reorder last order” button on order history

C. Build a full subscription plan with scheduled deliveries

D. Redesign the entire checkout flow UI

Correct answer: B

Rationale: B targets the stated friction with minimal scope and fast learning. A and C are higher-risk expansions. D might help but is broad; start with a focused lever.

Question (MCQ): Which interview question is least leading?

A. “Wouldn’t you agree the new onboarding is confusing?”

B. “How much do you like our new onboarding screens?”

C. “Walk me through the last time you signed up—what stood out as easy or hard?”

D. “Do you prefer onboarding with tips or tutorials?”

Correct answer: C

Rationale: C prompts concrete recall and leaves room for positives/negatives. A and B bias sentiment; D constrains options prematurely.

Scenario: You have 6 backlog items spanning bug fixes, a growth experiment, and a compliance request.

Question (MCQ): When is MoSCoW more appropriate than RICE?

A. When you have reliable reach/impact estimates

B. When a hard deadline (e.g., compliance) creates must-do constraints

C. When you’re optimizing only for revenue

D. When you want to avoid stakeholder input

Correct answer: B

Rationale: MoSCoW is useful when constraints define “musts” vs. “shoulds.” RICE is better when you can estimate reach/impact/confidence/effort.
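RICE is commonly computed as (Reach × Impact × Confidence) / Effort. The sketch below shows that formula and one way a MoSCoW-style “must” can override a RICE ranking. It is a minimal illustration only: the backlog items, their estimates, the units (reach per period, a 0.25 to 3 impact scale that is one common convention, confidence as a fraction, effort in person-months), and the `must_have` flag are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    name: str
    reach: float        # users affected per period (hypothetical estimate)
    impact: float       # e.g. 0.25 = minimal ... 3 = massive (a common RICE scale)
    confidence: float   # 0.0 to 1.0
    effort: float       # person-months
    must_have: bool = False  # hard constraint, e.g. a compliance deadline


def rice_score(item: BacklogItem) -> float:
    """RICE = (Reach x Impact x Confidence) / Effort."""
    if item.effort <= 0:
        raise ValueError("effort must be positive")
    return item.reach * item.impact * item.confidence / item.effort


def prioritize(items: list[BacklogItem]) -> list[BacklogItem]:
    # Must-haves sort first (MoSCoW-style constraint); RICE orders everything else.
    return sorted(items, key=lambda i: (not i.must_have, -rice_score(i)))


backlog = [
    BacklogItem("Growth experiment", reach=5000, impact=1.0, confidence=0.5, effort=2),
    BacklogItem("Login bug fix", reach=800, impact=2.0, confidence=0.9, effort=0.5),
    BacklogItem("Compliance request", reach=100, impact=0.5, confidence=1.0, effort=1, must_have=True),
]

for item in prioritize(backlog):
    print(f"{item.name}: {rice_score(item):.0f}")
```

The compliance request has the lowest RICE score (50) but still comes first, which is exactly the situation option B describes: the deadline, not the estimate, decides.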

Question (MCQ): Which is most likely a north star metric for a consumer language-learning app?

A. Number of push notifications sent

B. Daily active learners completing a lesson

C. Number of A/B tests run per month

D. Total customer support tickets

Correct answer: B

Rationale: A north star metric captures delivered user value at scale. Push volume and test count are activity metrics; support tickets are typically a cost/quality indicator.

Scenario:

Signup → Activation → First Lesson → Day-7 Retention

Question (MCQ): Best next step?

A. Increase acquisition spend to offset retention

B. Investigate activation step changes and segment by device/version

C. Redesign lesson content immediately

D. Remove the activation step entirely without analysis

Correct answer: B

Rationale: The first visible drop is activation. Segmenting by device/version/time helps isolate a release or channel mix effect.
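The diagnosis in this rationale can be sketched as step-to-step conversion compared against a baseline week, followed by a segment split. This is a minimal sketch under stated assumptions: all counts are made up (the scenario’s dashboard figures are not reproduced here), and the 5% relative threshold and the function names are hypothetical.

```python
from typing import Optional


def step_conversion(funnel: dict[str, int]) -> dict[str, float]:
    """Conversion of each step relative to the step before it (dict order = funnel order)."""
    steps = list(funnel)
    return {steps[i]: funnel[steps[i]] / funnel[steps[i - 1]] for i in range(1, len(steps))}


def first_regression(baseline: dict[str, int], current: dict[str, int],
                     threshold: float = 0.05) -> Optional[str]:
    """First step whose step conversion fell more than `threshold` (relative) vs. baseline."""
    base = step_conversion(baseline)
    for step, rate in step_conversion(current).items():
        if rate < base[step] * (1 - threshold):
            return step
    return None


# Hypothetical weekly counts (not the scenario's actual dashboard numbers).
baseline = {"Signup": 10_000, "Activation": 7_000, "First Lesson": 5_600, "Day-7 Retention": 2_800}
current = {"Signup": 10_000, "Activation": 5_500, "First Lesson": 4_400, "Day-7 Retention": 2_200}
print(first_regression(baseline, current))  # prints Activation

# Segment the regressed step by platform to localize it (again, made-up numbers).
segments = {
    "iOS": ({"Signup": 5_000, "Activation": 3_500}, {"Signup": 5_000, "Activation": 3_450}),
    "Android": ({"Signup": 5_000, "Activation": 3_500}, {"Signup": 5_000, "Activation": 2_050}),
}
for name, (seg_base, seg_now) in segments.items():
    print(name, first_regression(seg_base, seg_now))
```

In this made-up data the downstream totals also fall, but their step rates are unchanged, which is why step conversion (not raw counts) points at activation, and the segment split then points at a single platform.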

Question (MCQ): Which hypothesis is most testable?

A. “Users will love a cleaner homepage.”

B. “A cleaner homepage will increase engagement.”

C. “If we reduce homepage options from 12 to 6, then click-through to key actions will increase by 5% without reducing conversion.”

D. “Our competitor’s homepage is better.”

Correct answer: C

Rationale: C specifies the change, metric, expected direction/magnitude, and a guardrail.
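To show why option C is testable, here is a minimal readout sketch for it. It assumes “increase by 5%” means a relative lift in click-through, uses hypothetical counts, and applies a standard pooled two-proportion z-test (normal approximation). Reading “without reducing conversion” as “treatment conversion is not significantly lower” is a simplifying assumption, not a rule from this page.

```python
import math


def two_proportion_z(x_a: int, n_a: int, x_b: int, n_b: int) -> tuple[float, float]:
    """Pooled two-proportion z-test, B vs. A. Returns (z, two-sided p-value)."""
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)), which equals erfc(|z| / sqrt(2)).
    return z, math.erfc(abs(z) / math.sqrt(2))


def evaluate(ctr_a, ctr_b, conv_a, conv_b, min_lift=0.05, alpha=0.05):
    """Each argument is a (successes, users) tuple; a = control, b = treatment."""
    lift = (ctr_b[0] / ctr_b[1]) / (ctr_a[0] / ctr_a[1]) - 1
    _, p_primary = two_proportion_z(*ctr_a, *ctr_b)
    z_guard, p_guard = two_proportion_z(*conv_a, *conv_b)
    primary_ok = lift >= min_lift and p_primary < alpha
    guardrail_ok = not (z_guard < 0 and p_guard < alpha)  # fails only on a significant drop
    return {"ctr_lift": lift, "primary_ok": primary_ok, "guardrail_ok": guardrail_ok}


# Hypothetical results: 10,000 users per arm.
print(evaluate((2000, 10_000), (2200, 10_000), (500, 10_000), (495, 10_000)))
print(evaluate((2000, 10_000), (2200, 10_000), (500, 10_000), (400, 10_000)))
```

The second call mirrors the kind of mixed result in the status-update prompt later on: the primary metric wins while the guardrail fails, so the hypothesis as written is not supported.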

Question (MCQ): Which set of acceptance criteria is best?

User story: “As a user, I want to export my activity so I can share it.”

A. “Export should be easy to use.”

B. “Export must be implemented by Friday.”

C. “User can export last 30 days to CSV from settings; file includes date, activity type, duration; export completes in <10 seconds for 95% of users.”

D. “Export should use modern design patterns.”

Correct answer: C

Rationale: C is specific, testable, and includes performance expectations.
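A criterion like “completes in <10 seconds for 95% of users” is testable because it maps to a percentile check. A minimal sketch, assuming one recorded export duration per user (the sample values are hypothetical) and using the nearest-rank definition of a percentile, which is one of several common conventions:

```python
import math


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def export_meets_criterion(durations_s: list[float], limit_s: float = 10.0, pct: float = 95) -> bool:
    # "<10 seconds for 95% of users": the 95th percentile must be strictly below the limit.
    return percentile_nearest_rank(durations_s, pct) < limit_s


# Hypothetical samples: 20 users' export times in seconds.
passing = [2.0] * 18 + [9.5, 14.0]
failing = [2.0] * 18 + [11.0, 14.0]
print(export_meets_criterion(passing), export_meets_criterion(failing))
```

One slow outlier out of 20 is tolerated; two push the 95th percentile over the limit.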

Scenario: Engineering says a feature will take 6 weeks; Sales promises it in 2 weeks.

Question (SJT-style): Best APM response?

A. Tell Sales they shouldn’t promise features

B. Ask Engineering to “try harder” and work nights

C. Align on the customer impact, explore a reduced-scope MVP, and communicate trade-offs with a revised commitment

D. Escalate immediately to the CEO

Correct answer: C

Rationale: C manages expectations with scope options and evidence.

Scenario:

Option 1: reduces churn by 0.5% (on 200k users).

Option 2: increases conversion by 1% (on 50k signups/month).

Assume average monthly revenue per active user = $10, and conversion creates an active user.

Question (MCQ): Which option likely yields higher monthly impact (directionally)?

A. Option 1

B. Option 2

C. They’re equal

D. Not enough info to estimate directionally

Correct answer: A

Rationale: Option 1 retains 0.5% × 200,000 = 1,000 users, or about $10k/month. Option 2 adds 1% × 50,000 = 500 users, or about $5k/month.
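The same back-of-envelope comparison fits in a few lines, which keeps the assumptions explicit. This sketch treats each percentage as a share of its stated base and looks at a single month only; the function names are illustrative.

```python
def retained_users(base_users: int, churn_reduction: float) -> float:
    # Users kept this month who would otherwise have churned.
    return base_users * churn_reduction


def added_users(monthly_signups: int, conversion_lift: float) -> float:
    # Extra signups this month that become active users.
    return monthly_signups * conversion_lift


ARPU = 10.0  # average monthly revenue per active user ($), from the scenario

option_1 = retained_users(200_000, 0.005) * ARPU
option_2 = added_users(50_000, 0.01) * ARPU
print(f"Option 1: ${option_1:,.0f}/month, Option 2: ${option_2:,.0f}/month")
```

Writing it this way makes it easy to check how sensitive the answer is, for example by changing the base sizes or the revenue assumption.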

Prompt (short answer):

Write a 5-sentence status update to leadership after an experiment increased click-through by 7% but decreased purchase conversion by 2%.

Strong answer characteristics (rubric-based):

Use this in Section B.

You’re the APM for a streaming app. After a new onboarding release, the dashboard shows:

MCQ/SJT-style items: 1 point each (no penalty). Convert to % by area.

Mini-case prompts: 0–4 each, using the rubric below. Convert to %.

Writing prompt: 0–10, using the writing rubric. Convert to %.

Final score: sum(weight × area %).
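A minimal sketch of this scoring model follows. The focus-area names and weights are hypothetical placeholders because they are not specified here. The sketch also makes two further assumptions: points from all formats are pooled within each area before converting to %, and the weights sum to 1 so the final score stays on a 0 to 100% scale.

```python
from collections import defaultdict

# Each scored item: (focus_area, points_earned, max_points).
# MCQ/SJT items: 1 point each; mini-case prompts: 0-4; writing prompt: 0-10.
items = [
    ("product_sense", 1, 1),
    ("product_sense", 0, 1),
    ("analytics", 3, 4),
    ("analytics", 1, 1),
    ("communication", 7, 10),
]

# Hypothetical area weights (not given in the source); they must sum to 1.
weights = {"product_sense": 0.4, "analytics": 0.4, "communication": 0.2}


def area_percentages(scored_items):
    earned = defaultdict(float)
    possible = defaultdict(float)
    for area, points, max_points in scored_items:
        earned[area] += points
        possible[area] += max_points
    return {area: 100.0 * earned[area] / possible[area] for area in possible}


def final_score(scored_items, area_weights):
    if abs(sum(area_weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    pct = area_percentages(scored_items)
    # An area with no scored items contributes 0%.
    return sum(w * pct.get(area, 0.0) for area, w in area_weights.items())


print(area_percentages(items))
print(f"Final score: {final_score(items, weights):.1f}%")
```

If you prefer to convert each format to % separately before weighting, score each (area, format) pair as its own entry in the weights instead of pooling points.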

0 (Off-target): Doesn’t address the prompt or misunderstands the data.

1 (Surface): Names a plausible idea but gives no rationale or next step.

2 (Competent): Correct direction and basic reasoning, but misses a key constraint.

3 (Strong): Clear logic; prioritizes and identifies risks/assumptions.

4 (Excellent): Structured and data-aware; proposes fast validation and crisp metrics.

Use these as practice indicators, not a definitive judgment.

These are starting points only. Validate internally, document job relevance, and avoid using a single score as the sole hiring decision.

Track on-the-job outcomes vs. assessment results over time to calibrate your bands and reduce false positives/negatives.

Daily (30 min):

3x/week (45 min):

Weekly (60 min):

Deliverable to create: a small portfolio (2 PRD outlines + 2 experiment plans + 2 metric diagnoses).

Product thinking & customer value: Harvard DCE PM guidance (customer insight, strategy, communication foundations)

Structured interviewing & scorecards: LinkedIn Talent Blog guidance on structured interviews and interviewer scorecards

Skills-based hiring & assessment design: SHRM guidance on skills-based hiring, assessment design, and job-related testing

Analytics: Amplitude or Mixpanel demo datasets (funnels, retention)

Delivery: Jira (user stories, acceptance criteria), Notion/Confluence (PRDs)

Experimentation: simple A/B test readout templates (metrics + guardrails)

Product sense: “What user segment are we not serving, and what’s the smallest test to learn?”

Analytics: “A KPI drops 8% week-over-week. Walk me through your first 30 minutes.”

Experimentation: “Define success and guardrails for this test. What would make you stop it early?”

Execution: “Turn this feature into a user story with acceptance criteria and edge cases.”

Stakeholders: “Two teams disagree on priority. How do you align without authority?”

This is how you turn an associate product management test from a generic quiz into structured, job-relevant input for practice and hiring, useful to candidates and hiring teams alike.