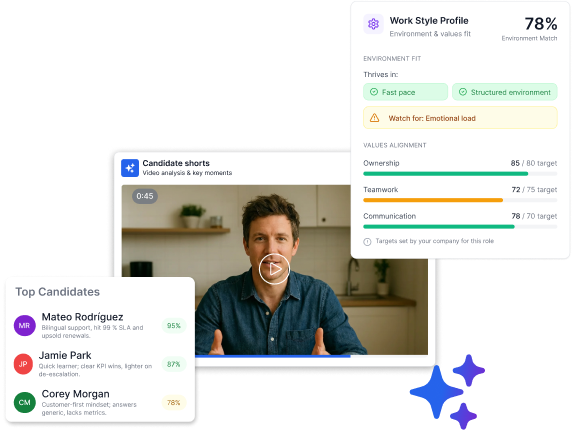

“Design assessment” is one of those overloaded terms that causes bad search results—and worse real-world decisions.

Some people mean assessment design for learning (courses, training, critique-based studios). Others mean a design skills assessment for hiring (UX, product, graphic, service design).

This page solves the ambiguity by giving you two clear pathways—without forcing you to stitch together advice from academic guides, Reddit threads, and pre-employment testing vendors.

You’ll get a practical, standards-informed assessment package with a repeatable framework, job- and outcomes-aligned task formats, ready-to-adapt rubrics with scoring anchors, and quality controls that many “best practices” pages skip: validity, reliability (including inter-rater calibration), bias and accessibility audits, and integrity-by-design for remote/AI-enabled environments.

If you’re a hiring manager, design leader, educator, L&D partner, or senior practitioner who needs assessments that are fair, transparent, and consistent, this is built for you. It’s also designed for ambitious professionals: use the sample scenarios and scoring to self-assess, identify gaps, and turn results into a credible growth plan.

Most assessments fail because they test the wrong thing (low validity), score inconsistently (low reliability), or disadvantage capable people through avoidable ambiguity and accessibility barriers. The goal here is simple: help you create a design assessment that people trust because it measures role-relevant work, scores consistently, respects candidates/learners, and provides actionable insight to support better decisions.

(and how it connects to real work)

A strong design assessment does not measure “taste” or tool tricks.

It focuses on role-relevant competencies and produces structured evidence you can evaluate consistently.

This assessment package covers 9 skill domains, usable for both learning and hiring.

Each domain is written as a competency evidenced through observable work.

Problem framing & outcomes alignment

Defines the problem, success metrics, constraints, and stakeholders. Aligns work to outcomes (learning or business).

Research & insight quality

Chooses appropriate methods, avoids leading questions, synthesizes findings into actionable insights.

Concept generation & exploration

Produces multiple viable directions, avoids premature convergence, documents rationale.

Systems thinking & consistency

Creates coherent patterns, reusable components, predictable behaviors.

Craft & execution

Demonstrates visual hierarchy, typography, layout, interaction clarity, and attention to detail.

Communication & critique

Explains decisions, receives feedback, iterates, writes and presents clearly.

Collaboration & stakeholder management

Negotiates tradeoffs, manages expectations, integrates cross-functional input.

Ethics, accessibility & inclusion

Applies inclusive design practices (contrast, semantics, cognitive load), anticipates harm and bias risks.

Process integrity in modern contexts

Works effectively in remote/asynchronous settings, documents process, uses AI responsibly with disclosure.

To ensure consistency and transparency, the package uses widely recognized assessment principles:

Why this matters:

Many resources talk about authenticity but skip practical mechanics like:

(two clear pathways)

The core framework stays the same. Only the stakes and task formats change.

Learning pathway: use when your goal is growth, feedback, progression, or evidence of mastery.

Best-fit formats

Hiring pathway: use when your goal is structured comparison, consistent evidence, and role relevance.

Best-fit formats

Ethical note

Hiring assessments should:

(6 repeatable steps)

Treat assessment creation like product design:

Requirements → Prototype → Test → Iterate

Write 3–7 measurable competencies.

Examples

Quality check

If you can’t explain how it shows up in real work, don’t assess it.

What would actually convince you?

Evidence types

Quality check

Evidence must be observable and scorable.

Match reality and constraints:

Use an analytic rubric with 4 performance levels.

Standardize:

Run an Assessment QA review:

(9 realistic scenarios)

Use as a question bank.

For learning → graded or formative

For hiring → select 2–3 and time-box

Scenario: Redesign onboarding to reduce drop-off (no data provided)

Prompt

Covers: framing, outcomes, risk thinking

Scenario: 5 days to inform redesign for diverse users

Prompt

Covers: rigor, inclusion, method choice

Scenario: Messy notes from 8 interviews

Prompt

Covers: synthesis quality

Scenario: Subscription tier change flow

Constraints

Prompt

Covers: systems, accessibility, clarity

Scenario: Dense landing page that “needs to pop”

Prompt

Covers: craft, rationale

Scenario: Stakeholder says “This looks boring”

Prompt

Covers: communication, stakeholder handling

Scenario: Growth wants a dark pattern

Prompt

Covers: ethics, maturity

Scenario: AI tools allowed

Prompt

Covers: transparency, judgment

Scenario: Engineering says it’s too complex

Prompt

Covers: collaboration, feasibility

1 — Foundational

Incomplete, unclear, misaligned

2 — Developing

Partially correct, gaps remain

3 — Proficient

Clear, complete, handles constraints

4 — Advanced

Anticipates risks, strong tradeoffs, clear judgment

Total = weighted score (max 4.0)

3.6–4.0 — Advanced

High autonomy, strong judgment

3.0–3.5 — Proficient

Reliable, scalable performer

2.3–2.9 — Developing

Capable but inconsistent

1.0–2.2 — Foundational

Needs structured skill building
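
The weighted total and the four score bands above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation. The domain names and relative weights in `WEIGHTS` are hypothetical, since the package does not specify them here. The sketch also assumes totals are rounded to one decimal place before banding, because the bands are stated to one decimal and would otherwise leave gaps (for example between 3.5 and 3.6).

```python
from typing import Dict

# Hypothetical relative weights, for illustration only.
# They are normalized by their sum, so they need not add up to 1.
WEIGHTS: Dict[str, int] = {
    "problem_framing": 3,
    "research": 2,
    "craft": 2,
    "communication": 3,
}


def weighted_total(scores: Dict[str, int], weights: Dict[str, int]) -> float:
    """Return the weighted average of rubric levels (1-4) across domains."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same domains")
    for domain, level in scores.items():
        if not 1 <= level <= 4:
            raise ValueError(f"level for {domain} must be between 1 and 4")
    total_weight = sum(weights.values())
    return sum(scores[d] * weights[d] for d in weights) / total_weight


def band(total: float) -> str:
    """Map a weighted total (max 4.0) to one of the four score bands."""
    rounded = round(total, 1)  # assumption: band on the one-decimal score
    if rounded >= 3.6:
        return "Advanced"
    if rounded >= 3.0:
        return "Proficient"
    if rounded >= 2.3:
        return "Developing"
    return "Foundational"


# Example: one rater's levels for a single candidate or learner.
example_scores = {
    "problem_framing": 4,
    "research": 3,
    "craft": 3,
    "communication": 4,
}
example_total = weighted_total(example_scores, WEIGHTS)
example_band = band(example_total)
```

Using relative weights and dividing by their sum keeps the maximum at 4.0 regardless of how many domains are scored, which matches the stated scale.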

Long unpaid take-homes hurt candidate experience and distort performance.

“You may use any standard design tools and AI assistants. Disclose where AI was used and what you validated independently. We evaluate reasoning and decision quality.”

Non-negotiables: