You have a stack of applicants and a handful worth a live conversation — but figuring out which handful means a week of phone screens, scheduling, and repeated intros. AI-assisted screening software replaces that first-round grind with structured interviews and assessments candidates complete on their schedule. You review transcripts, summaries, and scores in batches — and shortlist in hours instead of days.

This guide covers what AI-assisted screening software does, how five platforms compare (with real tradeoffs), pricing models, ATS integration, and a step-by-step rollout plan you can execute this week.

What AI-assisted screening is and when it works

AI-assisted screening (which includes asynchronous interviews and AI-resistant assessments) lets you run early-stage screening without scheduling a live call. You build a short interview, typically 3–5 structured questions, and can add assessments that measure what AI can't fake. Candidates complete everything on their own time, and your team reviews later in batches with AI-generated summaries and match scores.

Unlike live video (Zoom, Teams), async makes the first screen repeatable and comparable across every applicant — critical when you're dealing with high volume or multiple time zones.

When to use it: High-volume roles where phone screens don't scale (retail, support, sales, ops). Distributed teams with scheduling bottlenecks. Lean hiring teams that need a structured first pass without enterprise overhead. Roles where communication or judgment matters and a resume doesn't tell you enough.

When not to use it: Late-stage evaluation where real-time problem-solving matters. Executive or highly consultative roles where nuance is best assessed live. Roles requiring interactive demos such as pair programming, whiteboarding, or live role-plays. And if you're not going to use a rubric, you'll just recreate the inconsistency of phone screens in video form.

Five platforms compared

Full transparency: Truffle is our product. We built it to help teams screen every applicant without phone-screening all of them — AI surfaces the signal, you make the decisions. The comparisons below are meant to be useful — but we're opinionated about structured, transcript-first screening.

Truffle

Best for: Growing teams and high-volume roles (support, sales, SDR, ops, hourly) where phone screens are the bottleneck.

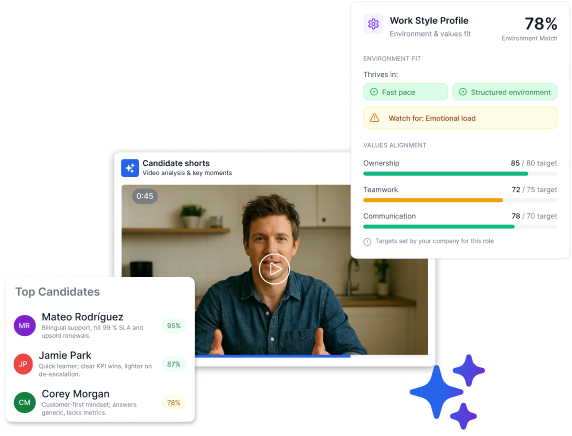

What it does: Paste a job description to generate a structured screening flow with async video interviews and three AI-resistant assessments (Personality, Situational Judgment, Environment Fit), then share one link. Review with full transcripts, AI summaries, match scores with reasoning, rubric-based scoring, and Candidate Shorts (a 30-second highlight reel of key moments). An audio-first option is available for faster review and to reduce the weight of appearance in evaluation.

Pricing: $99/month annual, $129/month month-to-month. Free trial, no credit card.

ATS: Greenhouse and Lever natively; Workday and iCIMS via Zapier or API.

Setup: Live in 10–15 minutes.

Tradeoffs: Not a full chatbot + scheduling + sourcing suite. Focused on structured first-round screening with interviews and AI-resistant assessments — if you need a multi-module enterprise platform, look elsewhere.

Spark Hire

Best for: Teams that want classic one-way video with collaborative review and already have strong human-led evaluation habits (reviewer training, calibration, structured scorecards).

What it does: One-way video Q&A with team sharing, ratings, and evaluation basics. Solid core interviews without heavy AI processing.

Pricing: Per-seat tiers. Check limits on users, active jobs, and storage.

ATS: Common ATS integrations — confirm your specific platform and what syncs.

Setup: Fast, but with more manual configuration than auto-generated workflows.

Tradeoffs: At high volume, you're still building a manual review queue. Confirm how transcripts, automation, and rubric support work in your tier.

Willo

Best for: SMBs and distributed teams that want plug-and-play async screening without enterprise overhead — seasonal ops, support, frontline management.

What it does: Simple one-way interviews with quick setup, templates for recurring roles, and a candidate-friendly experience.

Pricing: Tiered SMB plans — typically by active jobs or users. Check storage and branding limits.

ATS: Some native ATS options; Zapier or API for others. Confirm what actually syncs beyond a shared link.

Setup: Fast with templates.

Tradeoffs: Integration depth varies. If your ATS lacks a native connector, plan for Zapier or manual steps.

HireVue

Best for: Enterprise TA/HR with formal procurement, security requirements, dedicated ops support, and multi-step assessment workflows.

What it does: On-demand video interviews and assessments (game-based, coding, situational judgment) as part of a broader enterprise hiring platform. Strong governance, admin controls, and documentation.

Pricing: Custom enterprise contracts. Expect a sales cycle and longer implementation.

ATS: Deep enterprise ATS connectivity (Workday, iCIMS, Taleo). Confirm scope by package.

Setup: 2–6 weeks depending on program complexity.

Tradeoffs: Built for scale and compliance documentation — heavier implementation and change management than self-serve tools. Pricing reflects enterprise positioning.

Hireflix

Best for: Small teams that want an affordable, no-fuss one-way interview step and don't need heavy AI or complex automation.

What it does: Lightweight one-way interviews — create questions, send invites, review, decide. Clean candidate flow and straightforward reviewer experience.

Pricing: Tiered SMB plans — typically by users or active jobs.

ATS: Limited native integrations. Confirm options before committing.

Setup: Fast and lightweight.

Tradeoffs: Simplicity is the feature — but confirm transcription, structured rubrics, and automation (reminders, routing) are available in your tier. "Simple" can mean "manual" at volume.

How to choose

Don't over-research. Pick the tool that matches your bottleneck, confirm it integrates with your ATS, and pilot it on one role.

Match tool to problem

If your bottleneck is phone screens and you need structured first-round screening with AI-assisted review and AI-resistant assessments, you're looking at Truffle or (at enterprise scale) HireVue. If you want straightforward video Q&A with human-led review, consider Spark Hire or Willo. If you just need a lightweight async step without complexity, consider Hireflix.

Confirm ATS integration before you commit

"Integrates with your ATS" can mean native real-time sync or a link pasted into notes. Get specific: Does it create or update candidate records in your ATS? Does completion trigger a stage change? Do scores, transcripts, and summaries write back to structured fields (not just notes)? How does it handle duplicates? If native isn't available, is Zapier or API a realistic fallback for your team?

Evaluate the review experience

The tool should make reviewing faster, not just move the queue from phone to video. Look for: transcripts (so you can scan before watching), AI summaries, structured rubrics with per-question scoring, assessments that measure what AI can't fake, batch review so you compare candidates side-by-side, and clear pass/fail signals tied to your criteria.

Protect candidate experience

Completion rates live or die on friction. Keep the total flow under 15 minutes. Require no app downloads and no account creation: one link, any device. Provide clear instructions upfront: number of questions, estimated time, and whether retakes are allowed. Send no more than two reminders before the deadline, and a confirmation on completion.

Check pricing for real costs

Most async tools price by tier — based on seats, interviews per month, active jobs, or some combination. Watch for: AI features gated to higher tiers, storage or transcript processing fees, integration add-ons, and overage charges when volume spikes. If you can't get a price without a sales call, the tool is built for a different buyer.

How to set up and run AI-assisted screening

You can go from zero to a live screening step in under an hour. Here's the workflow.

Build the screening flow

Start with 3–5 structured interview questions mapped to the role's must-haves, and consider adding assessments (Personality, Situational Judgment, Environment Fit) to measure what AI can't fake. Each question should map to a specific evaluation criterion; don't ask anything you're not going to score. Keep total time under 15 minutes with 1–3 minutes per answer and 15–30 seconds of thinking time. Those ranges don't all fit at once: five questions at the maximums run 17.5 minutes, so trim the question count or the answer limit to stay within budget. Allow one retake to boost completion without inviting gaming.
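One way to sanity-check the time budget before publishing a flow is to add up the worst case. The sketch below is illustrative only: `Question`, `flow_seconds`, and `fits_budget` are hypothetical names rather than any platform's API, and it assumes every candidate uses the full thinking and answer time with no retakes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    prompt: str
    think_seconds: int   # preparation time shown before recording starts
    answer_seconds: int  # maximum recording length


def flow_seconds(questions: list[Question], assessment_seconds: int = 0) -> int:
    """Worst-case duration of one pass through the flow, without retakes."""
    interview = sum(q.think_seconds + q.answer_seconds for q in questions)
    return interview + assessment_seconds


def fits_budget(questions: list[Question], assessment_seconds: int = 0,
                budget_minutes: int = 15) -> bool:
    """True when the worst case stays strictly under the budget."""
    return flow_seconds(questions, assessment_seconds) < budget_minutes * 60


if __name__ == "__main__":
    # Placeholder prompts; only the timings matter for the budget check.
    long_flow = [Question(f"Question {i}", 30, 180) for i in range(1, 6)]
    short_flow = [Question(f"Question {i}", 30, 120) for i in range(1, 5)]
    for name, flow in (("long", long_flow), ("short", short_flow)):
        print(name, flow_seconds(flow) / 60, "min", fits_budget(flow))
```

Swapping in your own limits shows quickly whether adding an assessment pushes the flow past the budget.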

For most first-round screening, audio-only is worth considering: it's lower-friction for candidates, works on low bandwidth, speeds up review, and reduces the influence of appearance on evaluation. Use video when it's job-relevant — customer-facing roles, on-camera positions, presentation-heavy jobs.

Attach a scorecard

Build one scorecard per role with the criteria that actually matter (3–5 is enough). Anchor each level with examples — "3 out of 5 on problem-solving" should mean the same thing to every reviewer. If you have multiple reviewers, score 3–5 sample responses together first to align on the rubric before scaling.
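As a rough sketch of what an anchored scorecard and a calibration check could look like in code: the `Criterion` type, the `calibration_gaps` helper, the anchor wording, and the one-point disagreement threshold are all assumptions made for illustration, not features of any tool named here.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One scorecard criterion with an example anchoring each score level."""
    name: str
    anchors: dict[int, str]  # score level -> what an answer at that level sounds like


def calibration_gaps(scores_by_reviewer: dict[str, dict[str, int]],
                     max_spread: int = 1) -> dict[str, int]:
    """Return criteria where reviewers' scores differ by more than max_spread."""
    by_criterion: dict[str, list[int]] = {}
    for scores in scores_by_reviewer.values():
        for name, score in scores.items():
            by_criterion.setdefault(name, []).append(score)
    return {
        name: max(values) - min(values)
        for name, values in sorted(by_criterion.items())
        if max(values) - min(values) > max_spread
    }


if __name__ == "__main__":
    problem_solving = Criterion(
        name="problem_solving",
        anchors={
            1: "Restates the problem without proposing a next step.",
            3: "Proposes a workable fix but does not weigh alternatives.",
            5: "Compares options, picks one, and explains the tradeoff.",
        },
    )
    # Two reviewers scoring the same sample response (made-up data).
    sample = {
        "reviewer_a": {"problem_solving": 3, "communication": 4},
        "reviewer_b": {"problem_solving": 5, "communication": 4},
    }
    print(calibration_gaps(sample))  # {'problem_solving': 2}
```

Any criterion that shows up in the result is a candidate for tightening the anchor wording before more reviewers start scoring.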

Connect to your ATS and automate invites

The ideal trigger: candidate applies → invite fires automatically → completion updates the candidate's stage → scores and transcript write back to the record. If your ATS supports native triggers, use them. Otherwise, Zapier or the API can handle "new application → send invite" and "completion → move stage + notify reviewer." Schedule reminders for 48 and 24 hours before the deadline.
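The trigger chain and reminder timing can be sketched as plain functions before wiring anything to a real ATS. Everything named here is hypothetical: the event names (`application.created`, `interview.completed`), the action names, and the helpers are placeholders, and actual ATS webhooks or Zapier triggers will use their own identifiers.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical event and action names; real ATS and Zapier triggers will differ.
ROUTES = {
    "application.created": ["send_invite"],
    "interview.completed": ["move_stage", "write_back_scores", "notify_reviewer"],
}


def actions_for(event_type: str) -> list[str]:
    """Look up which actions an incoming event should trigger."""
    return list(ROUTES.get(event_type, []))


def reminder_times(invited_at: datetime, deadline: datetime,
                   hours_before: tuple[int, ...] = (48, 24)) -> list[datetime]:
    """Reminder timestamps before the deadline, skipping any that would
    fall at or before the moment the invite was sent."""
    candidates = (deadline - timedelta(hours=h) for h in hours_before)
    return sorted(t for t in candidates if t > invited_at)


if __name__ == "__main__":
    invited = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
    deadline = invited + timedelta(days=5)
    print(actions_for("application.created"))
    for when in reminder_times(invited, deadline):
        print("remind at", when.isoformat())
```

Keeping the routing in one table makes it easy to see which steps still depend on manual work when a native connector is missing.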

Review in batches

Review by question, not by candidate — so you're comparing apples to apples. Start with transcripts and rubric scores. Listen to or watch recordings only when you need more context on borderline cases. A second reviewer is worth adding for edge cases, not for every candidate.
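If your tool exports responses, reviewing by question amounts to a simple regrouping. This is a minimal sketch under assumptions: the record fields (`candidate`, `question`, `score`) and the cutoffs for what counts as borderline are invented for illustration and would need to match your own export and rubric.

```python
from collections import defaultdict


def group_by_question(responses: list[dict]) -> dict[str, list[dict]]:
    """Regroup per-candidate responses so each question's answers sit together,
    highest rubric score first."""
    grouped = defaultdict(list)
    for response in responses:
        grouped[response["question"]].append(response)
    for items in grouped.values():
        items.sort(key=lambda r: r["score"], reverse=True)
    return dict(grouped)


def needs_recording(response: dict, fail_at: int = 2, pass_at: int = 4) -> bool:
    """Borderline means scored strictly between the clear-fail and clear-pass marks."""
    return fail_at < response["score"] < pass_at


if __name__ == "__main__":
    # Made-up responses on a 1-5 rubric.
    responses = [
        {"candidate": "A", "question": "Q1", "score": 3},
        {"candidate": "B", "question": "Q1", "score": 5},
        {"candidate": "A", "question": "Q2", "score": 2},
        {"candidate": "B", "question": "Q2", "score": 4},
    ]
    for question, items in group_by_question(responses).items():
        for r in items:
            flag = "listen" if needs_recording(r) else "transcript only"
            print(question, r["candidate"], r["score"], flag)
```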

Measure and iterate

After the first role, check: completion rate (if it's low, your invite or question design needs work), time per review, whether the shortlist held up through live interviews, and pass-through rates across the rubric. Adjust questions, rubric anchors, or time limits based on what you find — then template it for the next role.
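The checks above reduce to a few ratios. The sketch below is one possible way to compute them; the function name, the metric names, and the sample counts are assumptions, and "shortlist rate" is used here as a simple stand-in for pass-through.

```python
def funnel_metrics(invited: int, completed: int, shortlisted: int,
                   advanced_after_live: int, review_minutes: float) -> dict[str, float]:
    """Summarize one role's screening funnel as simple ratios."""

    def ratio(part: float, whole: float) -> float:
        # Avoid dividing by zero when a stage has no candidates yet.
        return part / whole if whole else 0.0

    return {
        "completion_rate": ratio(completed, invited),
        "shortlist_rate": ratio(shortlisted, completed),
        # Share of the shortlist that also passed the live interview.
        "shortlist_hold_rate": ratio(advanced_after_live, shortlisted),
        "minutes_per_review": ratio(review_minutes, completed),
    }


if __name__ == "__main__":
    # Made-up counts for a single role.
    metrics = funnel_metrics(invited=120, completed=90, shortlisted=18,
                             advanced_after_live=12, review_minutes=270)
    for name, value in metrics.items():
        print(f"{name}: {value:.2f}")
```

Tracking the same four numbers for every role makes it easier to tell whether a change to the questions or rubric actually helped.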

Pricing: what to expect

AI-assisted screening software pricing varies by model, but the landscape, from self-serve to enterprise, looks roughly like this:

Self-serve tools with transparent pricing (Truffle, Willo, Hireflix) offer monthly plans — typically $99–$300/month depending on tier, seats, and volume. Free trials are common. You can evaluate the workflow before committing.

Mid-market tools (Spark Hire, Humanly) price per-seat with tiers that gate features. Expect $200–$500/month for a small team. Confirm what's included versus what's an add-on.

Enterprise platforms (HireVue) run custom contracts — often five figures annually — with implementation, security reviews, and dedicated support. The sales cycle is part of the cost.

What drives cost: Seats or users, interview volume (per month or per job), AI features (summaries, scoring, transcripts), integration depth (native vs. Zapier vs. API), security and compliance features (SSO, SAML, data retention), and storage.

The real question: Does the tool save your team enough review hours to justify the monthly cost within the first few roles? For most teams hiring regularly, a self-serve tool in the $99–$300 range pays for itself quickly once you stop scheduling phone screens for candidates you could have screened in five minutes. Run the math with your own volume and current time-per-screen — the answer is usually obvious.
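To run that math, a back-of-the-envelope calculation is enough. In this sketch the function names are hypothetical, and the 30-minute phone screen and $40 hourly cost are placeholder assumptions to replace with your own figures; only the five-minute async review and the $99 plan price come from this guide.

```python
import math


def monthly_hours_saved(candidates: int, phone_screen_minutes: float,
                        async_review_minutes: float = 5) -> float:
    """Reviewer hours saved per month by replacing phone screens with async review."""
    saved_per_candidate = phone_screen_minutes - async_review_minutes
    return candidates * saved_per_candidate / 60


def break_even_candidates(monthly_cost: float, hourly_cost: float,
                          phone_screen_minutes: float,
                          async_review_minutes: float = 5) -> int:
    """Smallest monthly candidate count at which saved time covers the tool cost."""
    saved_hours_each = (phone_screen_minutes - async_review_minutes) / 60
    if saved_hours_each <= 0 or hourly_cost <= 0:
        raise ValueError("no time is saved per candidate, so there is no break-even point")
    return math.ceil(monthly_cost / (hourly_cost * saved_hours_each))


if __name__ == "__main__":
    # Assumed inputs: 60 candidates a month, 30-minute phone screens, $40/hour.
    print(monthly_hours_saved(60, phone_screen_minutes=30))         # 25.0
    print(break_even_candidates(99, hourly_cost=40,
                                phone_screen_minutes=30))           # 6
```

Under those assumed inputs, a $99 plan is covered once about six candidates a month go through async review instead of a phone screen.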
