Design Assessment: Framework, Rubrics & Examples

What this design assessment covers

(and how it connects to real work)

A strong design assessment does not measure “taste” or tool tricks.

It focuses on role-relevant competencies and produces structured evidence you can evaluate consistently.

This assessment package covers 9 skill domains and can be used for:

- Learning assessments (courses, training, internal upskilling)

- Hiring assessments for design skills (UX, product, graphic, service)

Each domain is written as a competency evidenced through observable work.

Competency domains assessed

Problem framing & outcomes alignment

Defines the problem, success metrics, constraints, and stakeholders. Aligns work to outcomes (learning or business).

Research & insight quality

Chooses appropriate methods, avoids leading questions, synthesizes into actionable insights.

Concept generation & exploration

Produces multiple viable directions, avoids premature convergence, documents rationale.

Systems thinking & consistency

Creates coherent patterns, reusable components, predictable behaviors.

Craft & execution

Visual hierarchy, typography, layout, interaction clarity, attention to detail.

Communication & critique

Explains decisions, receives feedback, iterates, writes and presents clearly.

Collaboration & stakeholder management

Negotiates tradeoffs, manages expectations, integrates cross-functional input.

Ethics, accessibility & inclusion

Applies inclusive design practices (contrast, semantics, cognitive load), anticipates harm and bias risks.

Process integrity in modern contexts

Works effectively in remote/asynchronous settings, documents process, uses AI responsibly with disclosure.

Industry standards and terminology used

To ensure consistency and transparency, the package uses widely recognized assessment principles:

- Content validity (job/outcome relevance)

- Reliability (scoring consistency across evaluators and time)

- Structured evaluation with predefined criteria and anchors

- Candidate experience & assessment burden (time-boxing, transparency, no overload)

- Governance mindset (risk/control thinking for regulated contexts)

Why this matters:

Many resources talk about authenticity but skip practical mechanics like:

- Calibration

- Bias auditing

- Accessibility-first task design

- Integrity patterns for remote/AI-enabled environments

The Assessment Design Navigator

(two clear pathways)

The core framework stays the same. Only the stakes and task formats change.

Path A — Assessments for learning (education / L&D)

Use when your goal is:

Growth, feedback, progression, evidence of mastery

Best-fit formats

- Studio critiques with structured rubrics

- Authentic projects with milestones

- Portfolios with reflective rationale

- Peer review + self-assessment loops

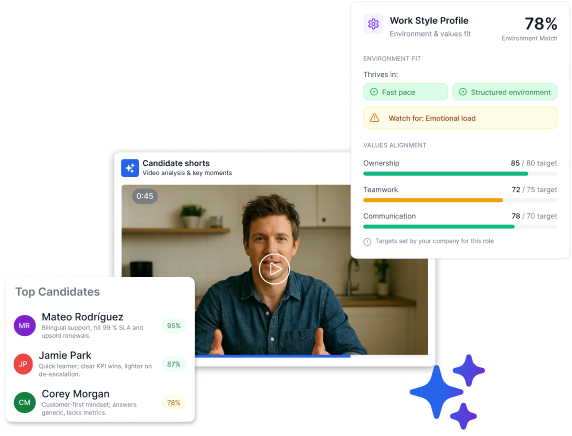

Path B — Assessments for hiring (UX / graphic / product)

Use when your goal is:

Structured comparison, consistent evidence, role relevance

Best-fit formats

- Time-boxed work sample simulations

- Structured portfolio review

- Structured interview + rubric

- Short skills checks (only when job-relevant)

Ethical note

Hiring assessments should:

- Avoid spec work

- Be clearly job-relevant

- Be time-boxed

- Use consistent rubrics

- Be transparent about expectations

The Assessment QA Framework

(6 repeatable steps)

Treat assessment creation like product design:

Requirements → Prototype → Test → Iterate

Step 1: Define outcomes / competencies

Write 3–7 measurable competencies.

Examples

- Learning: “Learner can produce an accessible UI flow with rationale and testing evidence.”

- Hiring: “Candidate can frame ambiguity, propose solutions, and communicate tradeoffs.”

Quality check

If you can’t explain how it shows up in real work, don’t assess it.

Step 2: Choose evidence

What would actually convince you?

Evidence types

- Artifacts (wireframes, layouts, flows)

- Decision logs (tradeoffs, constraints)

- Critique responses (iteration)

- Data/insights (research synthesis)

Quality check

Evidence must be observable and scorable.

Step 3: Select task format

Match reality and constraints:

- Performance task (highest relevance)

- Case analysis (senior roles)

- Portfolio + oral defense (process integrity)

- Knowledge check (foundational only)

Step 4: Build the rubric

Use an analytic rubric with 4 performance levels.

- Criteria map 1:1 to competencies

- Include clear scoring anchors
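One way to keep the 1:1 mapping honest is to check it mechanically before the rubric is used. The Python sketch below is only an illustration: the function name `rubric_problems`, the dictionary layout, and the anchor wording are all hypothetical, not part of this package.

```python
LEVELS = (1, 2, 3, 4)  # the four performance levels of the analytic rubric


def rubric_problems(competencies: list[str],
                    rubric: dict[str, dict[int, str]]) -> list[str]:
    """List mismatches between competencies and rubric criteria."""
    problems = []
    # Every competency needs exactly one criterion of the same name.
    for competency in competencies:
        if competency not in rubric:
            problems.append(f"no criterion for competency: {competency}")
    for criterion, anchors in rubric.items():
        if criterion not in competencies:
            problems.append(f"criterion without competency: {criterion}")
        # Every criterion needs a non-empty anchor for each level.
        for level in LEVELS:
            if not anchors.get(level, "").strip():
                problems.append(f"{criterion}: missing anchor for level {level}")
    return problems


# Hypothetical draft rubric with one anchor still unwritten.
competencies = ["Problem framing", "Craft & execution"]
draft = {
    "Problem framing": {
        1: "Problem statement missing or misaligned",
        2: "Problem stated, metrics or constraints missing",
        3: "Problem, metrics, and constraints are clear",
        4: "Also anticipates risks and tradeoffs",
    },
    "Craft & execution": {
        1: "Hierarchy unclear",
        2: "Hierarchy partly clear",
        3: "Clear hierarchy and layout",
    },
}
print(rubric_problems(competencies, draft))
# ['Craft & execution: missing anchor for level 4']
```

An empty list means every competency has one criterion and every criterion has all four anchors.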

Step 5: Run & score

Standardize:

- Time limits

- Allowed resources

- Deliverables

- Submission format

- Evaluation process & weighting

Step 6: Evaluate & iterate

Run an Assessment QA review:

- Where did strong people struggle due to ambiguity?

- Which criteria had low rater agreement?

- Did any group perform systematically worse?

- Was the time-box realistic?
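The rater-agreement question can be answered with very simple arithmetic. The sketch below assumes two raters per submission and uses the mean absolute gap between their scores as a rough agreement signal; the function name `mean_rater_gap`, the record layout, and the sample data are hypothetical, and a formal agreement statistic could be substituted.

```python
from collections import defaultdict


def mean_rater_gap(records):
    """records: iterable of (criterion, rater_a_score, rater_b_score).

    Returns the mean absolute score gap per criterion.
    """
    gaps = defaultdict(list)
    for criterion, score_a, score_b in records:
        gaps[criterion].append(abs(score_a - score_b))
    return {criterion: sum(g) / len(g) for criterion, g in gaps.items()}


# Hypothetical scores from two raters across two submissions.
records = [
    ("craft", 3, 3),
    ("craft", 4, 3),
    ("ethics", 2, 4),
    ("ethics", 1, 3),
]
by_gap = sorted(mean_rater_gap(records).items(), key=lambda kv: kv[1], reverse=True)
print(by_gap)  # [('ethics', 2.0), ('craft', 0.5)]
```

Criteria at the top of the list are the first candidates for clearer anchors.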

Sample design assessment

(9 realistic scenarios)

Use as a question bank.

For learning → graded or formative

For hiring → select 2–3 and time-box

1) Problem framing under ambiguity

Scenario: Redesign onboarding to reduce drop-off (no data provided)

Prompt

- One-page brief: problem statement, assumptions, risks, success metrics, first 3 research steps

Covers: framing, outcomes, risk thinking

2) Research plan & bias control

Scenario: 5 days to inform redesign for diverse users

Prompt

- Choose 2 methods

- Justify them

- Write 6 interview questions

- Identify 2 bias risks + mitigations

Covers: rigor, inclusion, method choice

3) Synthesis to insights

Scenario: Messy notes from 8 interviews

Prompt

- Produce 5 insights with evidence, impact, design implication

Covers: synthesis quality

4) Interaction design with constraints

Scenario: Subscription tier change flow

Constraints

- Mobile-first

- Billing impact visible

- Screen reader support

Prompt

- Key screens + state/error annotations

Covers: systems, accessibility, clarity

5) Visual design hierarchy

Scenario: Dense landing page that “needs to pop”

Prompt

- Layout system (type scale, grid, spacing)

- Explain hierarchy decisions

Covers: craft, rationale

6) Critique & iteration

Scenario: Stakeholder says “This looks boring”

Prompt

- Clarify goals

- Propose 2 alternatives

- Define what you’d test

Covers: communication, stakeholder handling

7) Ethical decision

Scenario: Growth wants a dark pattern

Prompt

- Identify risks

- Propose ethical alternative

- Define guardrails

Covers: ethics, maturity

8) AI-era integrity

Scenario: AI tools allowed

Prompt

- Decision log: AI use, validation, changes post-feedback

Covers: transparency, judgment

9) Cross-functional tradeoffs

Scenario: Engineering says it’s too complex

Prompt

- Phased plan (MVP → v1 → v2)

- Tradeoffs + acceptance criteria

Covers: collaboration, feasibility

Scoring system

Rubric levels (1–4)

1 — Foundational

Incomplete, unclear, misaligned

2 — Developing

Partially correct, gaps remain

3 — Proficient

Clear, complete, handles constraints

4 — Advanced

Anticipates risks, strong tradeoffs, clear judgment

Weighting (default)

- Problem framing & outcomes – 15%

- Research & insight – 10%

- Exploration & concepting – 10%

- Systems thinking – 10%

- Craft & execution – 15%

- Communication & critique – 15%

- Collaboration – 10%

- Ethics & accessibility – 10%

- Process integrity – 5%

Total = sum of (domain score × domain weight), giving a weighted score with a maximum of 4.0
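As a worked illustration of the weighting, here is a minimal Python sketch. The weights are the defaults listed above; the domain keys, the function name `weighted_score`, and the sample scores are hypothetical.

```python
# Default weights from the list above, expressed as fractions of 1.0.
WEIGHTS = {
    "problem_framing": 0.15,
    "research_insight": 0.10,
    "exploration_concepting": 0.10,
    "systems_thinking": 0.10,
    "craft_execution": 0.15,
    "communication_critique": 0.15,
    "collaboration": 0.10,
    "ethics_accessibility": 0.10,
    "process_integrity": 0.05,
}


def weighted_score(domain_scores: dict[str, float]) -> float:
    """Return the weighted total on the 1-4 rubric scale."""
    if set(domain_scores) != set(WEIGHTS):
        raise ValueError("scores must cover exactly the weighted domains")
    for domain, score in domain_scores.items():
        if not 1 <= score <= 4:
            raise ValueError(f"{domain}: score {score} is outside 1-4")
    return sum(WEIGHTS[d] * s for d, s in domain_scores.items())


# Hypothetical candidate: level 3 in every domain, level 4 in craft.
example = {domain: 3 for domain in WEIGHTS}
example["craft_execution"] = 4
print(round(weighted_score(example), 2))  # 3.15
```

Because the weights sum to 100%, a candidate scoring 4 in every domain reaches exactly the 4.0 maximum.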

Must-review thresholds (recommended)

- Accessibility & ethics < 2.5

- Communication < 2.5 (client-facing roles)
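These thresholds can be applied as a simple flag check. The sketch below assumes each domain score is an average across evaluators (which is how a value such as 2.5 can arise from whole-number rubric levels); that reading, the domain keys, and the function name `must_review_flags` are assumptions, not part of the package.

```python
# Thresholds from the list above; domain keys are illustrative.
MUST_REVIEW_BELOW = {
    "ethics_accessibility": 2.5,
    "communication_critique": 2.5,  # applied only to client-facing roles
}


def must_review_flags(avg_scores: dict[str, float], client_facing: bool) -> list[str]:
    """Return the domains whose averaged score falls below its threshold."""
    flags = []
    for domain, threshold in MUST_REVIEW_BELOW.items():
        if domain == "communication_critique" and not client_facing:
            continue  # the communication threshold does not apply to this role
        if avg_scores[domain] < threshold:
            flags.append(domain)
    return flags


# Hypothetical averages from two evaluators.
scores = {"ethics_accessibility": 2.0, "communication_critique": 3.0}
print(must_review_flags(scores, client_facing=True))  # ['ethics_accessibility']
```

A flagged domain triggers a review regardless of how high the weighted total is.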

Calibration (20-minute reliability check)

- Score one sample independently

- Compare domain scores

- Discuss discrepancies

- Rewrite anchors if needed

- Re-score until evaluators' domain scores agree within ≈ ±0.5
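The comparison step can be sketched in a few lines of Python. This example reads the ±0.5 target as "every evaluator's score lies within 0.5 of the group mean for that domain"; that interpretation, the function name `domains_needing_discussion`, and the sample ratings are assumptions.

```python
def domains_needing_discussion(ratings: dict[str, list[float]],
                               tolerance: float = 0.5) -> dict[str, float]:
    """ratings maps each domain to one score per evaluator.

    Returns the domains whose largest deviation from the mean exceeds
    the tolerance, together with that deviation.
    """
    flagged = {}
    for domain, scores in ratings.items():
        mean = sum(scores) / len(scores)
        max_deviation = max(abs(score - mean) for score in scores)
        if max_deviation > tolerance:
            flagged[domain] = max_deviation
    return flagged


# Hypothetical scores from two evaluators on one calibration sample.
sample = {
    "problem_framing": [3, 3],
    "craft_execution": [2, 4],
    "communication_critique": [2, 3],
}
print(domains_needing_discussion(sample))  # {'craft_execution': 1.0}
```

Only the flagged domains need discussion and possibly rewritten anchors before re-scoring.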

Skill level interpretation

3.6–4.0 — Advanced

High autonomy, strong judgment

3.0–3.5 — Proficient

Reliable, scalable performer

2.3–2.9 — Developing

Capable but inconsistent

1.0–2.2 — Foundational

Needs structured skill building
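The bands above can be turned into a small lookup. Since a weighted total such as 2.25 falls between the listed ranges, this sketch rounds the total to one decimal place before banding; the rounding rule and the function name `skill_level` are assumptions, not part of the package.

```python
# Lower bound of each band, highest first, taken from the ranges above.
BANDS = [
    (3.6, "Advanced"),
    (3.0, "Proficient"),
    (2.3, "Developing"),
    (1.0, "Foundational"),
]


def skill_level(total: float) -> str:
    """Map a weighted total (1.0-4.0) to its skill-level label."""
    rounded = round(total, 1)  # assumption: band on one decimal place
    if not 1.0 <= rounded <= 4.0:
        raise ValueError(f"total {total} is outside the 1.0-4.0 scale")
    for lower_bound, label in BANDS:
        if rounded >= lower_bound:
            return label
    raise AssertionError("unreachable: every valid total matches a band")


print(skill_level(3.15))  # Proficient
print(skill_level(2.2))   # Foundational
```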

Benchmarks

Hiring-friendly time boxes

- Portfolio + Q&A: 45–60 minutes

- Take-home simulation: 2–3 hours max

- Live exercise: 60–90 minutes

Long unpaid take-homes hurt candidate experience and distort performance.

Accessibility & inclusion checklist

- Accessible formats (Docs/PDFs, alt text)

- No color-only instructions

- Sufficient contrast

- Plain language (define acronyms)

- Multiple response modalities

- Clear accommodation process

- Don’t penalize non-job-critical communication styles

Remote + AI integrity-by-design

Allowed tools statement (recommended)

“You may use any standard design tools and AI assistants. Disclose where AI was used and what you validated independently. We evaluate reasoning and decision quality.”

Integrity patterns

- Require decision logs

- Include oral defense

- Use open-resource tasks that reward judgment

Hiring assessment ethical rules

Non-negotiables:

- Job relevance

- Time-boxing

- No spec work

- Transparent criteria

- Consistent conditions

- Respect & flexibility

- Clear closure (feedback if possible)

Quick start (60-minute team version)

- Select 3 scenarios

- Use rubric + weights

- Calibrate with one sample

- Run assessment

- Debrief with results + roadmap