Are Personality Tests Legal for Hiring? Risk Audit

What this assessment reviews (and why it matters)

This audit helps you assess whether your use of personality tests in selection is well documented and likely to be defensible if challenged. In practice, that means combining HR operations, basic industrial-organizational (I-O) psychology concepts, consistent administration, and disciplined documentation.

You’ll review your process across nine domains that map to common legal and ethical failure points in pre-employment assessments:

- Job-relatedness discipline (job analysis → essential functions)

  - Can you articulate the role’s essential functions and required behaviors?

  - Can you explain why each trait/scale is tied to a real job requirement?

- UGESP-informed validation readiness (evidence, not marketing)

  - Do you have evidence that the tool is appropriate for your job family and intended use?

  - Do you know what to ask a vendor for (and how to spot gaps)?

- Title VII risk controls (disparate treatment + disparate impact)

  - Is the tool applied consistently?

  - Are you monitoring outcomes for adverse impact, and do you have a documented response plan?

- ADA boundary management (avoid disability-related inquiries / pre-offer medical exams)

  - Does the content drift into diagnosis territory (e.g., depression, mania, PTSD proxies)?

  - Are you avoiding clinical instruments and clinical-style interpretation, especially pre-offer?

- Accommodation process maturity (accessible testing + individualized support)

  - Do you have a documented method for providing reasonable accommodations?

  - Are recruiters trained on how to route and handle requests?

- Administration consistency and scoring governance

  - Are instructions, timing, environment, and scoring consistent?

  - Are any thresholds documented, reviewed, and tied to job-related rationale?

- Candidate transparency and experience

  - Are you clearly explaining purpose, use, and next steps?

  - Do candidates have a path to raise concerns or request accommodations?

- Data privacy, retention, and vendor oversight

  - Are you minimizing data and controlling retention?

  - Is your vendor contract aligned to your obligations (security, deletion, audits, sub-processors)?

- Criticism-aware implementation (science + ethics → risk mitigation)

  - Are you managing known limitations (e.g., impression management, cultural/linguistic issues, neurodiversity concerns, varying validity by role)?

  - Are you treating personality results as one input rather than the sole decision point?

Industry anchors referenced:

- Title VII of the Civil Rights Act

Important: This is educational content, not legal advice. If your risk tier is Medium/High, consider consulting competent employment counsel—especially for multi-state hiring or regulated roles.

The framework: A 7-step workflow you can apply

Use this workflow whether you’re buying a vendor tool or building your own.

Step 1) Define the job (if you can’t define it, you can’t justify testing)

- Create/update a job analysis: essential functions, context, frequency, critical incidents.

- Translate to measurable competencies/behaviors: e.g., “handles high-volume customer requests calmly” rather than “is emotionally stable.”

If you cannot produce a one-page job analysis, then consider pausing personality testing until you can document the role requirements.

Step 2) Decide whether personality measurement is necessary

- Prefer skills-first tools where feasible: structured interviews, work samples, job knowledge tests.

If a trait is not tightly linked to job behaviors for this role, then avoid using it as a hard gate.

Step 3) Select the right instrument (non-clinical, job-focused)

- Avoid clinical/diagnostic tools or clinical-style scales.

- Favor occupationally designed instruments with documented technical information.

If the assessment could reveal mental health conditions, then it may raise ADA risk—especially pre-offer.

Step 4) Validate and document (UGESP mindset)

Build a minimum defensible documentation package:

- Job analysis summary

- Rationale mapping traits → job behaviors

- Vendor technical manual + relevant studies

- Local evidence plan (pilot where feasible)

- Threshold/banding policy rationale (if used)

If a vendor cannot provide a technical manual or explain intended use and limitations, then treat that as a significant risk indicator.

Step 5) Administer consistently (process control)

- Same instructions, same time limits (unless accommodated), same scoring rules.

- Train recruiters/hiring managers on what the outputs mean—and what they don’t.

If results are interpreted ad hoc (“I don’t like this profile”), then you increase disparate treatment risk.

Step 6) Provide accommodations (ADA)

- Publish an accommodation contact method before testing.

- Document requests, interactive process, and outcomes.

If you lack an accommodation process, then risk increases substantially.

Step 7) Monitor outcomes and improve (adverse impact + governance)

- Quarterly or per hiring wave: analyze selection rates by stage.

- When results are flagged, evaluate job-relatedness, administration consistency, and potential alternatives.

If you never run adverse impact checks, then you lack an early warning system.
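As a rough illustration of "analyze selection rates by stage," the sketch below computes the share of candidates who advance at each stage, broken out by group. The stage names, group labels, and counts are hypothetical placeholders, not real data, and the `selection_rates` helper is an assumption made for this example rather than part of any particular HR system.

```python
from typing import Dict, Optional, Tuple

# Hypothetical funnel: stage -> group -> (candidates entering, candidates advancing).
# All names and counts here are illustrative only.
Funnel = Dict[str, Dict[str, Tuple[int, int]]]


def selection_rates(funnel: Funnel) -> Dict[str, Dict[str, Optional[float]]]:
    """Return the selection rate (advanced / entered) per stage and group.

    A rate of None means no one from that group entered the stage,
    so no rate can be computed.
    """
    rates: Dict[str, Dict[str, Optional[float]]] = {}
    for stage, groups in funnel.items():
        rates[stage] = {}
        for group, (entered, advanced) in groups.items():
            if advanced > entered:
                raise ValueError(f"{stage}/{group}: advanced exceeds entered")
            rates[stage][group] = advanced / entered if entered > 0 else None
    return rates


funnel = {
    "personality_test": {"group_a": (200, 120), "group_b": (150, 60)},
    "interview": {"group_a": (120, 30), "group_b": (60, 18)},
}
rates = selection_rates(funnel)
for stage, by_group in rates.items():
    print(stage, by_group)
```

Keeping the rates separate per stage makes it easier to see whether a gap originates at the personality test itself or elsewhere in the process.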

The Compliance Risk Audit (self-scored)

How scoring works

Answer the 10 scenarios below. Each scenario has three options:

- A = 0 points (lower-risk practice)

- B = 2 points (moderate risk; gaps to address)

- C = 4 points (higher risk; likely weak defensibility)

Add your points for a total score from 0 to 40.

Risk tiers:

- 0–8: Lower Risk (generally better controlled; keep documenting)

- 9–20: Medium Risk (gaps that warrant fixes and review)

- 21–40: Higher Risk (consider pausing use and redesigning the process)
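The scoring rules above can be sketched in a few lines of Python. This is a minimal illustration, assuming answers are recorded as a mapping from scenario number to "A", "B", or "C"; the `score_audit` function and the example answers are hypothetical.

```python
from typing import Mapping, Tuple

# Points per option, as defined in the scoring rules above.
POINTS = {"A": 0, "B": 2, "C": 4}

# (inclusive upper bound, tier label), taken from the risk tiers above.
TIERS = [(8, "Lower Risk"), (20, "Medium Risk"), (40, "Higher Risk")]


def score_audit(answers: Mapping[int, str]) -> Tuple[int, str]:
    """Return (total points, risk tier) for answers keyed by scenario number."""
    total = 0
    for scenario, choice in answers.items():
        if choice not in POINTS:
            raise ValueError(f"Scenario {scenario}: expected A, B, or C, got {choice!r}")
        total += POINTS[choice]
    for upper, label in TIERS:
        if total <= upper:
            return total, label
    raise ValueError(f"Total {total} is outside the 0-40 range")


# Hypothetical set of answers for the 10 scenarios.
example = {1: "A", 2: "B", 3: "A", 4: "C", 5: "B",
           6: "A", 7: "B", 8: "C", 9: "A", 10: "B"}
total, tier = score_audit(example)
print(total, tier)  # 16 Medium Risk
```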

Red-flag areas that require urgent review:

- ADA boundary

- Adverse impact monitoring

- Validation/documentation

- Inconsistent administration

10 challenging scenarios (with what stronger practice looks like)

1) You’re selecting a tool for early-stage candidate review

Context: A vendor claims their personality test “improves retention.”

- A (0): You request the technical manual, validation evidence for similar job families, sample adverse impact reporting, and confirm it is non-clinical and not designed to diagnose mental health conditions.

- B (2): You accept high-level marketing materials and references, without reviewing a technical manual.

- C (4): You purchase based on testimonials and deploy immediately as a required pre-interview step.

2) The test includes items like “I often feel hopeless” or “I hear things others don’t”

Context: You notice several mental-health-adjacent statements.

- A (0): You avoid using that instrument pre-offer and select a non-clinical, job-behavior-focused tool.

- B (2): You keep it but ask the vendor to “not report those items.”

3) A candidate requests extra time and screen-reader compatibility

Context: The candidate discloses a disability only in the accommodation request.

- A (0): You engage an interactive process, provide reasonable adjustments, and document the accommodation.

- B (2): You provide extra time but have no written process and don’t document it.

- C (4): You deny it because “everyone must be treated the same.”

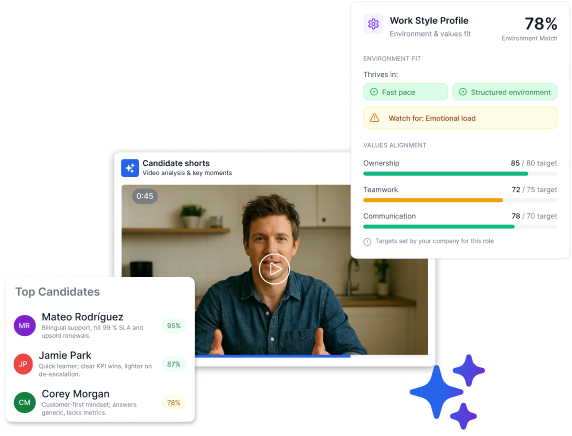

4) Managers want to see full personality profiles and make “culture fit” calls

Context: What teams often call “culture fit” is better framed as values alignment or environment fit—defined up front and applied consistently.

- A (0): You limit outputs to job-relevant competencies/behaviors, train managers, and prohibit unstructured “fit” decisions.

- B (2): You share the full report but provide guidance.

- C (4): You share everything and allow informal interpretation.

5) You use a cutoff: “Below 60 = reject”

Context: The vendor suggests a universal benchmark.

- A (0): You avoid hard cutoffs where possible, and document any threshold with job-related rationale and review.

- B (2): You adopt the vendor cutoff but plan to revisit later.

- C (4): You implement hard cutoffs with no documentation.

6) Your test is only available in English, but you hire bilingual roles

Context: Candidates report confusion about idioms.

- A (0): You ensure linguistic equivalence or use assessments designed for required language(s), and confirm any language requirement is job-related.

- B (2): You keep English-only but allow translation tools.

- C (4): You ignore complaints because “English is our corporate language.”

7) You suspect impression management (“faking good”)

Context: Scores look unusually uniform.

- A (0): You treat personality as one input, incorporate work samples/structured interviews, and focus on observable behaviors.

- B (2): You add a warning (“be honest”) and keep using it as the primary gate.

- C (4): You reject anyone who appears “too perfect.”

8) You’ve never checked whether the test disadvantages protected groups

Context: You don’t collect demographic data or analyze outcomes.

- A (0): You perform adverse impact monitoring by stage, apply the 4/5ths rule as an initial flag, and escalate when triggered.

- B (2): You review overall hires annually but not by stage.

- C (4): You don’t monitor because “the vendor said it’s unbiased.”

9) A vendor uses AI to infer traits from video/voice (e.g., “emotion detection”)

Context: Candidates record responses; vendor outputs traits like “emotional stability” and “confidence.”

- A (0): You treat it as high-risk: verify notice/consent requirements where applicable, require documentation (including bias testing where required), provide transparency, and ensure meaningful human review and decision-making.

- B (2): You rely on vendor assurances and add a brief consent checkbox.

- C (4): You use it as an automated reject step.

10) A rejected candidate asks why they failed the personality test

Context: You want to reduce conflict but avoid overpromising.

- A (0): You provide a respectful, consistent explanation of the process (high level), the role-related competencies/behaviors assessed, and a channel for accommodations/concerns—without disclosing proprietary scoring.

- B (2): You send a generic rejection email and do not respond to follow-ups.

- C (4): You say they were rejected because they “weren’t aligned with our values/work style.”

Calculate your score (0–40) and identify top risk drivers

Total points = sum of all answers.

Add red-flag markers (increase urgency regardless of score):

- Clinical/mental-health-adjacent items used pre-offer

- No accommodation process

- Hard cutoffs with no job-related rationale

- No adverse impact monitoring by stage

- Informal manager interpretation
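One way to keep red-flag markers separate from the point total is to record them alongside it, so that a low score does not hide an urgent issue. The sketch below assumes short hypothetical keys for the five markers listed above; the `summarize` function and its output shape are illustrative, not a prescribed format.

```python
from typing import Dict, Iterable, List, Union

# Hypothetical keys mapped to the red-flag markers listed above.
RED_FLAGS = {
    "clinical_items_pre_offer": "Clinical/mental-health-adjacent items used pre-offer",
    "no_accommodation_process": "No accommodation process",
    "undocumented_hard_cutoff": "Hard cutoffs with no job-related rationale",
    "no_stage_level_monitoring": "No adverse impact monitoring by stage",
    "informal_manager_interpretation": "Informal manager interpretation",
}


def summarize(total: int, observed: Iterable[str]) -> Dict[str, Union[int, bool, List[str]]]:
    """Combine the point total with any red flags; flags raise urgency regardless of score."""
    observed_set = set(observed)
    unknown = observed_set - RED_FLAGS.keys()
    if unknown:
        raise ValueError(f"Unknown red-flag keys: {sorted(unknown)}")
    # Preserve the order in which the markers are listed above.
    flagged = [label for key, label in RED_FLAGS.items() if key in observed_set]
    return {"total": total, "urgent": bool(flagged), "red_flags": flagged}


# A low total score can still be urgent if a red flag is present.
report = summarize(6, ["no_accommodation_process"])
print(report)
```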

Results: What your tier suggests (and what to do next)

Tier 1: Lower Risk (0–8) — “Maintain controls and keep documenting”

What this suggests: You have stronger process controls: job analysis, documentation, consistent administration, and a fairness monitoring loop.

Next steps (30 days):

- Create a one-page Selection Procedure File per role family (job analysis summary; purpose; mapping; admin guide; accommodation SOP; adverse impact snapshot).

- Run a quarterly adverse impact dashboard by stage.

Tier 2: Medium Risk (9–20) — “Fix gaps that can become liabilities under scrutiny”

What this suggests: You have some good practices but may be missing one of the core controls (documentation/validation readiness, ADA-safe content, or adverse impact monitoring).

Priority fixes:

1. Confirm the tool is non-clinical and remove diagnosis-adjacent items.

2. Collect documentation (technical manual; intended use; limitations) and complete job analysis + mapping.

3. Define how outputs may be used and reduce ad hoc interpretation.

4. Add adverse impact monitoring by stage.

Decision rule:

- If you can’t obtain basic documentation within 60–90 days, consider pausing use as a hard gate and shifting to work samples/structured interviews.

Tier 3: Higher Risk (21–40) — “Consider pausing and redesigning the process”

What this suggests: Your process may be difficult to defend (e.g., ADA boundary concerns, undocumented cutoffs, inconsistent administration, and/or no adverse impact monitoring).

Immediate containment (this week):

- Pause automated rejects based solely on personality results.

- Remove clinical-style instruments/items.

- Centralize administration under trained HR/TA staff.

Rebuild plan (30–60 days):

- Shift toward skills-first selection (structured interviews, job-relevant work samples, competency-tied references).

- Use personality only as a supplemental input after documentation and monitoring are operational.

Benchmarks and standards: “What stronger practice looks like”

Documentation benchmark (UGESP-aligned minimum package)

A credible program can produce:

- Job analysis (essential functions + competencies)

- Trait/scale mapping to job behaviors

- Vendor technical manual (reliability/validity evidence; intended use)

- Local validation/transportability rationale

- Threshold/banding rationale (or rationale for no hard threshold)

- Adverse impact monitoring by stage

- Accommodation SOP and de-identified logs

Adverse impact benchmark (screening heuristic)

- Apply the 4/5ths (80%) rule as an initial flag.
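For readers who want to see the arithmetic, the 4/5ths rule compares each group's selection rate to the highest group's rate and flags any ratio below 0.8. The sketch below is a minimal illustration with hypothetical group labels and rates; the `four_fifths_flags` function is an assumption made for this example, and a flag is only an initial screen, not a conclusion about adverse impact.

```python
from typing import Dict, Tuple


def four_fifths_flags(
    rates: Dict[str, float], threshold: float = 0.8
) -> Dict[str, Tuple[float, bool]]:
    """Return {group: (impact ratio, flagged)} relative to the highest selection rate.

    The impact ratio is a group's selection rate divided by the highest
    group's rate; a ratio below the threshold (4/5ths) is flagged for review.
    """
    if not rates:
        raise ValueError("No selection rates provided")
    highest = max(rates.values())
    if highest <= 0:
        raise ValueError("Highest selection rate must be greater than zero")
    result: Dict[str, Tuple[float, bool]] = {}
    for group, rate in rates.items():
        ratio = rate / highest
        result[group] = (ratio, ratio < threshold)
    return result


# Hypothetical selection rates at a single stage (illustrative numbers only).
stage_rates = {"group_a": 0.60, "group_b": 0.40}
flags = four_fifths_flags(stage_rates)
for group, (ratio, flagged) in flags.items():
    print(f"{group}: ratio={ratio:.2f} flagged={flagged}")
# group_b has a ratio of about 0.67, which is below 0.80, so it is flagged.
```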

Candidate experience benchmark

- Candidates should know: purpose, how results are used (one input vs determinative), how to request accommodations, and high-level data retention.

Red-flag question bank (what to avoid) + safer rewrites

High-risk / avoid (examples)

- “I have been diagnosed with anxiety or depression.”

- “I often feel hopeless or worthless.”

- “I hear or see things other people don’t.”

- “I am currently taking medication for my mood.”

- “In the past year I have had panic attacks.”

Safer, job-behavior-focused alternatives (examples)

- “When priorities shift quickly, I can reorganize tasks and maintain accuracy.”

- “In stressful situations, I follow established procedures rather than reacting impulsively.”

- “I prefer to clarify ambiguous instructions before acting.”

Design principle: assess observable work behaviors and job-relevant preferences—not mental health status.

Final checklist: “Before you press launch”

Job-relatedness & necessity

- [ ] Job analysis completed and stored

- [ ] Trait/scale mapping to job behaviors documented

ADA safety

- [ ] Tool is non-clinical; no diagnosis-adjacent items

- [ ] Accommodation process published and trained

UGESP-style defensibility

- [ ] Technical manual and intended-use documentation on file

- [ ] Threshold rationale documented (or no hard cutoff)

Title VII fairness controls

- [ ] Consistent administration and scoring rules

- [ ] Adverse impact monitoring by stage, with triggers and response plan

Transparency and data governance

- [ ] Candidate notice explains purpose and use

- [ ] Retention limits, access controls, and vendor security terms verified

If you can’t check these boxes, the question isn’t only “are personality tests legal for hiring?”—it’s whether you’re ready to use one responsibly.